Viewing: Blog Posts Tagged with: us, Most Recent at Top

Results 1 - 25 of 147

How to use this Page

You are viewing the most recent posts tagged with the word "us" in the JacketFlap blog reader. What is a tag? Think of a tag as a keyword or category label. Tags can help you find posts on JacketFlap.com, and they also give you an easy way to "remember" and classify posts for later recall. Try adding a tag yourself by clicking "Add a tag" below a post's header. Scroll down through the list of Recent Posts in the left column and click on a post title that sounds interesting. You can view all posts from a specific blog by clicking the Blog name in the right column, or you can click a 'More Posts from this Blog' link in any individual post.

By: Katherine Soroya,

on 2/25/2015

Blog:

OUPblog

JacketFlap tags:

Books,

democracy,

Us,

inequality,

society,

industrial revolution,

population,

equality,

interest groups,

lobby,

Social Sciences,

*Featured,

Business & Economics,

redistribution,

social mobility,

Vuk Vukovic,

We are by now more or less aware that income inequality in the US and in most of the rich OECD world is higher today than it was some 30 to 40 years ago. Interpretations of what led to this increase vary, but the fact remains that inequality has risen persistently through both expansionary and contractionary economic times. One might even say that it has become a stylized fact of the developed world (with some notable exceptions). The question on everyone's lips is: how can a democracy produce rising inequality?

The post Inequality in democracies: interest groups and redistribution appeared first on OUPblog.

Two best friends wake up and start the day.

By: Jerry Beck,

on 4/25/2014

Blog:

Cartoon Brew

JacketFlap tags:

Nate Milton,

Animated Fragments,

2veinte,

Lei Lei,

Jenn Strickland,

Ross Butter,

Shaz Lym,

Canada,

US,

China,

UK,

Argentina,

Animated Fragments is our semi-regular feature of animation tests, experiments, micro-shorts, and other bits of cartoon flotsam that don't fit into other categories. To view the previous 25 installments, go to the Fragments archive.

By: Jerry Beck,

on 8/18/2013

Blog:

Cartoon Brew

JacketFlap tags:

Chile,

US,

Japan,

UK,

Spain,

Iran,

Animated Fragments,

Mehdi Alibeygi,

AllaKinda,

Estudio Pintamonos,

Max Halley,

Sarah Airriess,

Yukie Nakauchi,

Interesting animation is being produced everywhere you look nowadays. This evening, we’re delighted to present animated fragments from six different countries: Chile, Iran, UK, US, Japan and Spain. For more, visit the Animated Fragments archive.

“Lollypop Man—The Escape” (work-in-progress) by Estudio Pintamonos (Chile)

“Bazar” by Mehdi Alibeygi (Iran)

“Time” by Max Halley (UK)

Hand-drawn development animation for Wreck-It Ralph to “explore animation possibilities before [Gene's] model and rig were finalised” by Sarah Airriess (US)

“Rithm loops” for an iPhone/iPad app by AllaKinda (Spain)

“Against” by Yukie Nakauchi (Japan)

By: Jerry Beck,

on 5/12/2013

Blog:

Cartoon Brew

JacketFlap tags:

Music Videos,

Poland,

The Netherlands,

Binary,

Fit,

Iron and Wine,

Hayley Morris,

Eric Deuel,

Job Joris & Marieke,

Nicos Livesey,

Renata Gąsiorowska,

Spencer Day,

Tom Bunker,

US,

UK,

Our semi-regular roundup of interesting, creative and original animated music videos.

Music video for Alphabets Heaven.

“The Mystery of You” directed by Eric Deuel (US)

Music video for Spencer Day.

“Been Too Long” (“Duurt te Lang”) directed by Job, Joris & Marieke (The Netherlands)

Music video for Fit.

Music video for Binary.

Lead Animators (2D & 3D): Blanca Martinez de Rituerto & Joe Sparrow

Secondary 2D Animation: Andy Baker, Tom Bunker, Nicos Livesey

Music video for Iron and Wine.

Director/Animator: Hayley Morris

Fabricators: Hayley Morris, Denise Hauser and Randy Bretzin

Color Correction: Evan Kultangwatana

Model for watercolor animation: Louise Sheldon

By: Jerry Beck,

on 3/23/2013

Blog:

Cartoon Brew

JacketFlap tags:

Music Videos,

Belgium,

Spain,

Mark Borgions,

Lucas Borras,

Matt Christensen,

Tom Jobbins,

US,

UK,

Our weekly roundup of the most interesting, creative and original animated music videos.

Music video for The Middle Eight. Go to Matt’s website for a behind-the-scenes photo album.

“We Can Be Ghosts Now” directed by Tom Jobbins (UK)

Music video for Hiatus feat. Shura.

Art Director: John Jobe Reynolds

Cinematographer: Matthias Pilz

Colorist: Danny Atkinson

Compositor: Jonathan Topf

Editor: Robert Mila

“Magdalena” directed by Lucas Borras (Spain/US)

Music video for Quantic & Alice Russell.

“Separated” directed by Mark Borgions (Belgium)

Music video for Stan Lee Cole.

By: Jerry Beck,

on 3/11/2013

Blog:

Cartoon Brew

JacketFlap tags:

US,

UK,

Australia,

Music Videos,

Poland,

Darcy Prendergast,

Tim McCourt,

Wesley Louis,

Oh Yeah Wow,

Katarzyna Kijek,

Alan Foreman,

Przemysław Adamski,

Seamus Spilsbury,

The weekend is almost over, but it’s not too late to check out these quality animated music videos that have recently come to my attention.

“Katachi” directed by Kijek/Adamski (Poland)

Music video for Shugo Tokumaru. Watch the making-of video.

“the light that died in my arms” directed by Alan Foreman (US)

Music video for Ten Minute Turns. Song written and recorded by Alan Foreman and Roger Paul Mason

Music video for Mat Zo and Porter Robinson. Credits:

Directed by Louis & McCourt

Art Direction by Bjorn Aschim

Animators: Jonathan ‘Djob’ Nkondo, James Duveen, Sam Taylor, Wesley Louis, Tim McCourt

Backgrounds and Layouts: Bjorn Aschim, Mike Shorten

Compositing: Sam Taylor, Jonathan Topf

3D VFX Directing by Jonathan Topf

Graphic Design by Hisako Nakagawa

Producers: Jack Newman, Drew O’Neill

Produced by Bullion

“Time to Go” directed by Darcy Prendergast and Seamus Spilsbury (Australia)

Music video for Wax Tailor produced by Oh Yeah Wow. Credits:

Animators: Sam Lewis, Mike Greaney, Seamus Spilsbury, Darcy Prendergast

VFX supervisor: Josh Thomas

Assistant animators: Alexandra Calisto de Carvalho, Joel Williams

Compositors: Josh Thomas, Jeremy Blode, James Bailey, Alexandra Calisto de Carvalho, Keith Crawford, Dan Steen

Crochet sculptor: Julie Ramsden

Colour grade: Dan Stonehouse, Crayon

By: Alice,

on 2/24/2013

Blog:

OUPblog

JacketFlap tags:

black history month,

woodson,

African American Studies,

african,

strived,

United States,

Abraham Lincoln,

leffler,

fredrick douglass,

American National Biography,

fredrick,

African Americans,

*Featured,

luncheon,

Online Products,

ANB,

African American lives,

Dr Carter Woodson,

abernathy,

History,

US,

February marks a month of remembrance for Black History in the United States. It is a time to reflect on the events that have enabled freedom and equality for African Americans, and a time to celebrate the achievements and contributions they have made to the nation.

Dr Carter Woodson, an advocate for black history studies, initially created “Negro History Week” to coincide with the birthdays of two great men who strove to improve the lives of African Americans: Frederick Douglass and Abraham Lincoln. The celebration was later expanded to the whole month of February and became Black History Month. Find out more about important African American lives with our quiz.

Rev. Ralph David Abernathy speaks at Nat’l. Press Club luncheon. Photo by Warren K. Leffler. 1968. Library of Congress.

The landmark American National Biography offers portraits of more than 18,700 men & women — from all eras and walks of life — whose lives have shaped the nation. The American National Biography is the first biographical resource of this scope to be published in more than sixty years.

Subscribe to the OUPblog via email or RSS.

Subscribe to only American history articles on the OUPblog via email or RSS.

The post African American lives appeared first on OUPblog.

By: KimberlyH,

on 2/19/2013

Blog:

OUPblog

JacketFlap tags:

Music,

History,

US,

avant-garde,

Jazz,

African American Studies,

swing,

Louis Armstrong,

John Coltrane,

coltrane,

AASC,

duke ellington,

bebop,

Miles Davis,

*Featured,

Arts & Leisure,

kenny,

Online Products,

Oxford African American Studies Center,

Oxford AASC,

African American National Biography,

Art Farmer,

Benny Carter,

Charlie Parker,

Don Byron,

Ernestine Anderson,

Ernie Andrews,

free jazz,

hard bop,

Harry “Sweets” Edison,

Jon Hendricks,

Kenny Barron,

Kenny Dorham,

Roy Hargrove,

Scott Yanow,

barron,

yanow,

By Scott Yanow

When the good folks at Oxford University Press approached me to write some entries on jazz artists, I noticed that while the biggest names (Louis Armstrong, Duke Ellington, Charlie Parker, Miles Davis, John Coltrane, etc.) were already covered, many other artists also deserved entries. I looked for several qualities in a musician before suggesting that he or she be written about. Each musician had to have a distinctive sound (always a prerequisite before any artist is considered a significant jazz musician), a strong body of work, and recordings that still sound enjoyable today. It did not matter whether the musician’s prime was in the 1920s or today. If their recordings still sounded good, they were eligible for a prestigious entry in the African American National Biography.

Some of the entries included in the February update to the Oxford African American Studies Center are veteran singers Ernestine Anderson, Ernie Andrews, and Jon Hendricks; trumpet legends Harry “Sweets” Edison, Kenny Dorham, and Art Farmer; and a few giants of today, including pianist Kenny Barron, trumpeter Roy Hargrove, and clarinetist Don Byron.

In each case, in addition to the musicians’ basic biographical information, key associations, and recordings, I have included a few sentences that place each artist in historical perspective: how they fit into their era, what their style was, and what they accomplished. Some musicians had only a brief but important prime, but a surprising number of artists had careers that lasted over 50 years. Benny Carter, the alto saxophonist and arranger, was in his musical prime for a remarkable 70 years and still sounded great when he retired after his 90th birthday.

Jazz, whether from 90 years ago or today, has always overflowed with exciting talents. While jazz history books often simplify events, making it seem as if there were only a handful of giants, the number of jazz greats is actually in the hundreds. There was more to the 1920s than Louis Armstrong, more to the swing era than Benny Goodman and Glenn Miller, and more to the classic bebop era than Charlie Parker and Dizzy Gillespie. For example, while Duke Ellington is justly celebrated, during the 49 years that he led his orchestra, he often had as many as ten major soloists in his band at one time, all of whom had colorful and interesting lives.

Because jazz has had such a rich history, it is easy for reference books and encyclopedias to overlook today's vital scene. The music did not stop with the death of John Coltrane in 1967 or the end of the fusion years in the late 1970s. Jazz evolved so rapidly between 1920 and 1980, in almost a straight line as the music became freer and more advanced, that it is easy (but inaccurate) to say it has since stopped evolving. What has actually happened during the past 35 years is that, instead of developing in one basic direction, the music has branched out in many. The music world became smaller, and many artists drew on world and folk music to create new types of “fusions.” Some musicians explored earlier styles in creative ways, ranging from 1920s jazz to hard bop. The avant-garde or free jazz scene introduced many new musicians, often on small-label releases. And some of the most adventurous players combined elements of past styles (such as plunger mutes on horns or collective improvisation) to create something altogether new.

While many veteran listeners might call one period or another jazz’s “golden age,” the truth is that the music has been in its prime since around 1920 (when records became more widely available) and is still in its golden age today. While jazz deserves a much larger audience, there is no shortage of creative young musicians of all styles and approaches on the scene today. The future of jazz is quite bright and the African American National Biography’s many entries on jazz greats reflect that optimism.

Scott Yanow is the author of eleven books on jazz, including The Great Jazz Guitarists, The Jazz Singers, Trumpet Kings, Jazz On Record 1917-76, and Jazz On Film.

The Oxford African American Studies Center combines the authority of carefully edited reference works with sophisticated technology to create the most comprehensive online collection of scholarship focused on the lives and events that have shaped African American and African history and culture. It provides students, scholars, and librarians with more than 10,000 articles by top scholars in the field.

Image Credit: Kenny Barron 2001, Munich/Germany. Photo by Sven.petersen, public domain via Wikimedia Commons

The post Jazz lives in the African American National Biography appeared first on OUPblog.

By: Alice,

on 2/18/2013

Blog:

OUPblog

JacketFlap tags:

Almost A Miracle,

War of Independence,

jensen,

merrill,

flexner,

including independence,

1793,

readable,

History,

US,

revolution,

"John Ferling",

"Presidents Day",

ferling,

*Featured,

By John Ferling

Picking out five books on the founding of the nation, and its leaders, is not an easy task. I could easily have listed twenty-five that were important to me. But here goes:

Merrill Jensen, The Founding of a Nation: A History of the American Revolution, 1763-1776 (New York, Oxford University Press, 1968)

This book remains the best single-volume history of the American Revolution through the Declaration of Independence. It isn’t flag-waving but a warts-and-all treatment, in which Jensen demonstrates that many of the now-revered Founders feared and resisted the insurgency that led to American independence.

Merrill Jensen, The American Revolution Within America (New York, New York University Press, 1974)

Obviously I admire the work of Merrill Jensen. Lectures delivered to university audiences quite often are not especially readable, but this collection of three talks that he delivered at New York University is a wonderful read. Jensen pulls no punches. He shows what some Founders sought to gain from the Revolution and what others hoped to prevent, and he makes clear that those who wished (“conspired” might be a better word) to stop the political and social changes unleashed by the American Revolution were in the forefront of those who wrote and ratified the US Constitution.

Gordon Wood, The Radicalism of the American Revolution (New York, Knopf, 1992)

While this book is far from a complete history of the American Revolution (and it never pretended to be), it chronicles how America was changed by the Revolution. I think the first eighty or so pages are among the best ever written on how people thought and behaved before the American Revolution. I always asked the students in my introductory US History survey classes to read that section of the book.

James Thomas Flexner, George Washington and the New Nation, 1783-1793 (Boston, Little Brown, 1970) and George Washington: Anguish and Farewell, 1793-1799 (Boston, Little Brown, 1972)

All right, I cheated. There are two books here, bringing my total to six. Flexner was a popular writer who produced a wonderful four-volume life of Washington in the 1960s and 1970s. These two volumes follow Washington after the War of Independence, and they offer a rich and highly readable account of his presidency and of the nearly three years left to him after his years as chief executive.

Peter Onuf, ed., Jeffersonian Legacies (Charlottesville, University Press of Virginia, 1993)

This collection of fifteen original essays by assorted scholars scrutinizes the nooks and crannies of Thomas Jefferson’s life and thought. As in any such collection, some essays are better than others, but on the whole this is a good starting point for understanding Jefferson and what scholars have thought of him. Though these essays were published twenty years ago, most remain surprisingly fresh and modern.

John Ferling is Professor Emeritus of History at the University of West Georgia. He is a leading authority on late 18th and early 19th century American history. His new book, Jefferson and Hamilton: The Rivalry that Forged a Nation, will be published in October. He is the author of many books, including Independence, The Ascent of George Washington, Almost a Miracle, Setting the World Ablaze, and A Leap in the Dark. He lives in Atlanta, Georgia.

The post Five books for Presidents Day appeared first on OUPblog.

By: Alice,

on 2/18/2013

Blog:

OUPblog

JacketFlap tags:

Forgotten Presidents,

James Monroe,

Michael J. Gerhardt,

Untold Constitutional Legacy,

gerhardt,

History,

US,

Barack Obama,

coolidge,

Grover Cleveland,

monroe,

*Featured,

Calvin Coolidge,

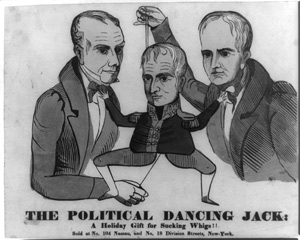

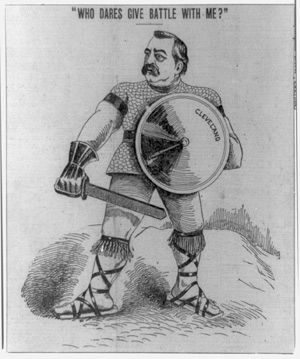

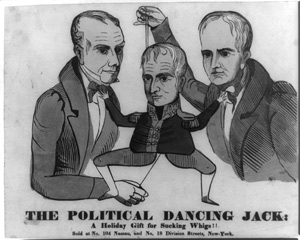

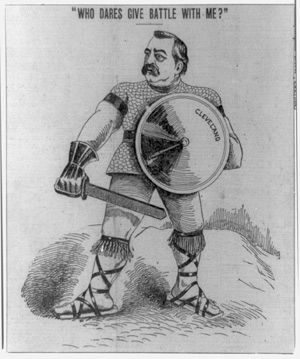

By Michael J. Gerhardt

If you think that Barack Obama can learn how to build a lasting legacy only from our most revered presidents, like Abraham Lincoln, you should think twice. I am sure that Obama knows what made the great presidents great. However, he can also learn from some once-popular presidents who are now forgotten because of mistakes they made or circumstances that helped bury their legacies.

Enduring presidential legacies require presidents to do things and express constitutional visions that stand the test of time. To be lasting, presidential legacies need to inspire subsequent presidents and generations to build on them. Without such inspiration and investment, legacies are lost and eventually forgotten.

Consider, for example, James Monroe, who, apart from Obama, is the only president to have been the third in a row to win reelection. Once wildly popular, he is now largely forgotten. His first term was known as the Era of Good Feelings because it coincided with the demise of any viable opposition party. When he was reelected in 1820, he won every electoral vote but one. Yet most Americans know nothing about his presidency except perhaps the “Monroe Doctrine,” which supported American intervention to protect the Americas from European interference. The doctrine endures because subsequent presidents have adhered to it.

Monroe’s record is largely forgotten for three reasons. First, his legislative achievements eroded over time. He signed two of the most significant laws enacted in the nineteenth century: the Missouri Compromise, restricting slavery in the Missouri territory, and the Tenure in Office Act, which restricted the terms of certain executive branch officials. But subsequent presidents differed over both laws’ constitutionality and tried to repeal or amend them. Eventually, the Supreme Court struck them both down.

Second, Monroe had no distinctive vision of the presidency or Constitution. He entered office as the fourth and last member of the Virginia dynasty of presidents. He had nothing to offer that could match the vision and stature of his three predecessors from Virginia — Washington, Jefferson, and Madison. Even with no opposition party, he was unsure where to lead the country. His last two years in office were so fractious, they became known as the era of bad feelings.

Third, Monroe had no close political ally to follow him in office. While he had been his mentor Madison’s logical successor, Monroe had no natural heir. Subsequent presidents, including John Quincy Adams who had been his Secretary of State, felt little fidelity to his legacy.

If President Obama wants to avoid Monroe’s mistakes, he must plan for the future. He should consider whom he would like to follow him and which of his legislative initiatives Republicans might support. If Obama stands on the sidelines in the next election or fails to produce significant bipartisan achievements in his second term, he risks having his successor(s) bury his legacy.

Grover Cleveland, another two-term president, is even more forgotten than Monroe. If he is remembered at all, it is as the only man to serve two non-consecutive terms as president. He was the only Democrat elected president in the second half of the nineteenth century and the only president other than Franklin Roosevelt to have won the popular vote in three consecutive presidential elections.

Yet Cleveland’s record is forgotten because he blocked rather than built. He devoted his first term to vetoing laws he thought favored special interests. He cast more vetoes than any president except FDR, and in his second term the Senate retaliated against his efforts to remove executive officials (and so create vacancies for him to fill) by stalling hundreds of his nominations.

Cleveland successfully appealed to the American people to break the impasse with the Senate, but his constant clashes with Congress took their toll. In his second term, his disdain for Congress, his bullying of its members, and his stubbornness prevented him from reaching any meaningful accord to deal with the worst economic downturn before the Great Depression of the 1930s. Cleveland refused to build bridges to Republicans in Congress, but Obama still has time to build some.

Finally, Calvin Coolidge had the vision and rhetoric required for an enduring legacy, but his results failed the test of time. He was virtually unknown when he became Republican Warren Harding’s Vice President. But when Harding died, Coolidge inherited a scandal-ridden administration. He worked methodically with Congress to root out corruption in the administration, established regular press briefings, and easily won the 1924 presidential election. Over the next four years, he signed the most significant federal disaster relief bill until Hurricane Katrina and the first federal regulations of broadcasting and aviation. He supported establishing the World Court and the Kellogg-Briand Pact, which outlawed war.

Coolidge’s vision had wide appeal. His conviction that the business of America was business still resonates among many Republicans, but he quickly squandered his good will with the Republican-led Senate when, shortly after his inauguration in 1925, he insisted on re-nominating Charles Warren as Attorney General after it had rejected his nomination. Coolidge could have easily won reelection, but he lost interest in politics after his son died in 1924. He did not help his Secretary of Commerce Herbert Hoover win the presidency in 1928 and said nothing as the economy lapsed into the Great Depression. His penchant for silence, for which he was widely ridiculed, and the failures of his international initiatives and economic policies destroyed his legacy.

As Obama enters his second term, he cannot stand above the fray like Monroe and Coolidge. He must lead the nation through it. He must work with Congress rather than become mired in squabbles with it, as Cleveland did; Cleveland's contempt for Congress and his limited vision made grand bargains impossible. On many issues, including gay rights and the debt ceiling, President Obama’s detachment has allowed him to be perceived as having been led rather than leading. He still has a chance to lead through his words and actions, and to define his legacy as something more than his having been the first African-American elected president or the controversy over the individual mandate in the Affordable Care Act. Unlike the forgotten presidents, he still has the means to build a legacy Americans will value and remember. But to avoid their fate, he must use those means now.

Michael Gerhardt is Samuel Ashe Distinguished Professor of Constitutional Law at the University of North Carolina, Chapel Hill. A nationally recognized authority on constitutional conflicts, he has testified in several Supreme Court confirmation hearings, and has published several books, including The Forgotten Presidents: Their Untold Constitutional Legacy.

Image credit: Images from The Forgotten Presidents.

The post The presidents that time forgot appeared first on OUPblog.

By: Alice,

on 2/17/2013

Blog:

OUPblog

JacketFlap tags:

18th Amendment,

Blaine Act,

Oxford Encyclopedia of Food and Drink in America,

effigy,

editor andrew,

food,

History,

US,

nypl,

prohibition,

blaine,

Food & Drink,

hung,

*Featured,

andrew f. smith,

repeal,

Arts & Leisure,

Old Man Prohibition hung in effigy from a flagpole as New York celebrated the advent of Repeal after years of bootleg booze. Source: NYPL.

How much do you know about the era of Prohibition, when gangsters rose to power and bathtub gin became a staple? 2013 marks the 80th anniversary of the repeal of the wildly unpopular 18th Amendment, a process initiated on 17 February 1933 when the Blaine Act passed the United States Senate. To celebrate, test your knowledge with the quiz below, filled with tidbits of 1920s trivia gleaned from The Oxford Encyclopedia of Food and Drink in America: Second Edition.

The second edition of The Oxford Encyclopedia of Food and Drink in America thoroughly updates the original, award-winning title, while capturing the shifting American perspective on food and ensuring that this title is the most authoritative, current reference work on American cuisine. Editor Andrew F. Smith teaches culinary history and professional food writing at The New School University in Manhattan. He serves as a consultant to several food television productions (airing on the History Channel and the Food Network), and is the General Editor for the University of Illinois Press’ Food Series. He has written several books on food, including The Tomato in America, Pure Ketchup, and Popped Culture: A Social History of Popcorn in America. The Oxford Encyclopedia of Food and Drink is also available on Oxford Reference.

Subscribe to only food and drink articles on the OUPblog via email or RSS.

The post A quiz on Prohibition appeared first on OUPblog.

By: JonathanK,

on 2/15/2013

Blog:

OUPblog

JacketFlap tags:

Europe,

World War Two,

monastery,

*Featured,

bombing,

Peter Caddick-Adams,

Monte Cassino,

Ten Armies in Hell,

aircraft struck cassino,

titanic matches on the mountain,

History,

US,

Oxford,

military history,

Military,

On the 15th of February 1944, Allied planes bombed the abbey at Monte Cassino as part of an extended campaign against German forces in Italy. St. Benedict of Nursia established his first monastery, the source of the Benedictine Order, here around 529. Over four months, the Battle of Monte Cassino would inflict some 200,000 casualties and rank as one of the most horrific battles of World War Two. This excerpt from Peter Caddick-Adams’s Monte Cassino: Ten Armies in Hell recounts the bombing.

On the afternoon of 14 February, Allied artillery shells scattered leaflets containing a printed warning in Italian and English of the abbey’s impending destruction. These were produced by the same US Fifth Army propaganda unit that normally peddled surrender leaflets and devised psychological warfare messages. The monks negotiated a safe passage through the German lines for 16 February — too late, as it turned out. American Harold Bond, of the 36th Texan Division, remembered the texture of the ‘honey-coloured Travertine stone’ of the abbey that fine Tuesday morning, and how ‘the Germans seemed to sense that something important was about to happen for they were strangely quiet’. Journalist Christopher Buckley wrote of ‘the cold blue on that late winter morning’ as formations of Flying Fortresses ‘flew in perfect formation with that arrogant dignity which distinguishes bomber aircraft as they set out upon a sortie’. John Buckeridge of 1/Royal Sussex, up on Snakeshead, recalled his surprise as the air filled with the drone of engines and waves of silver bombers, the sun glinting off their bellies, hove into view. His surprise turned to concern when he saw their bomb doors open — as far as his battalion was concerned the raid was not due for at least another day.

Brigadier Lovett of 7th Indian Brigade was furious at the lack of warning: ‘I was called on the blower and told that the bombers would be over in fifteen minutes… even as I spoke the roar [of aircraft] drowned my voice as the first shower of eggs [bombs] came down.’ At the HQ of the 4/16th Punjabis, the adjutant wrote: ‘We went to the door of the command post and gazed up… There we saw the white trails of many high-level bombers. Our first thought was that they were the enemy. Then somebody said, “Flying Fortresses.” There followed the whistle, swish and blast as the first flights struck at the monastery.’ The first formation released their cargo over the abbey. ‘We could see them fall, looking at this distance like little black stones, and then the ground all around us shook with gigantic shocks as they exploded,’ wrote Harold Bond. ‘Where the abbey had been there was only a huge cloud of smoke and dust which concealed the entire hilltop.’

The aircraft which committed the deed came from the massive resources of the US Fifteenth and Twelfth Air Forces (3,876 planes, including transports and those of the RAF in theatre), whose heavy and medium bombardment wings were based predominantly on two dozen temporary airstrips around Foggia in southern Italy (by comparison, a Luftwaffe return of aircraft numbers in Italy on 31 January revealed 474 fighters, bombers and reconnaissance aircraft in theatre, of which 224 were serviceable). Less than an hour’s flying time from Cassino, the Foggia airfields were primitive, mostly grass affairs, covered with Pierced Steel Planking runways, with all offices, accommodation and other facilities under canvas, or quickly constructed out of wood. In mid-winter the buildings and tents were wet and freezing, and often the runways were swamped with oceans of mud which inhibited flying. Among the personnel stationed there was Joseph Heller, whose famous novel Catch-22 was based on the surreal no-win-situation chaos of Heller’s 488th Bombardment Squadron, 340th Bomb Group, Twelfth Air Force, with whom he flew sixty combat missions as a bombardier (bomb-aimer) in B-25 Mitchells.

After the first wave of aircraft struck Cassino monastery, a Sikh company of 4/16th Punjabis fell back, understandably, and a German wireless message was heard to announce: ‘Indian troops with turbans are retiring’. Bond and his friends were astonished when, ‘now and again, between the waves of bombers, a wind would blow the smoke away, and to our surprise we saw the gigantic walls of the abbey still stood’. Captain Rupert Clarke, Alexander’s ADC, was watching with his boss. ‘Alex and I were lying out on the ground about 3,000 yards from Cassino. As I watched the bombers, I saw bomb doors open and bombs began to fall well short of the target.’ Back at the 4/16th Punjabis, ‘almost before the ground ceased to shake the telephones were ringing. One of our companies was within 300 yards of the target and the others within 800 yards; all had received a plastering and were asking questions with some asperity.’ Later, when a formation of B-25 medium bombers passed over, Buckley noticed, ‘a bright flame, such as a giant might have produced by striking titanic matches on the mountain-side, spurted swiftly upwards at half a dozen points. Then a pillar of smoke 500 feet high broke upwards into the blue. For nearly five minutes it hung around the building, thinning gradually upwards.’

Nila Kantan of the Royal Indian Army Service Corps was no longer driving trucks, as no vehicles could get up to the 4th Indian Division’s positions overlooking the abbey, so he found himself portering instead. ‘On our shoulders we carried all the things up the hill; the gradient was one in three, and we had to go almost on all fours. I was watching from our hill as all the bombers went in and unloaded their bombs; soon after, our guns blasted the hill, and ruined the monastery.’ For Harold Bond, the end was the strangest part: ‘then nothing happened. The smoke and dust slowly drifted away, showing the crumbled masonry with fragments of walls still standing, and men in their foxholes talked with each other about the show they had just seen, but the battlefield remained relatively quiet.’

The abbey had been literally ruined, not obliterated as Freyberg had required, and was now one vast mountain of rubble with many walls still remaining up to a height of forty or more feet, resembling the ‘dead teeth’ General John K. Cannon of the USAAF wanted to remove; ironically those of the north-west corner (the future target of all ground assaults through the hills) remained intact. These the Germans, sheltering from the smaller bombs, immediately occupied and turned into excellent defensive positions, ready to slaughter the 4th Indian Division when they belatedly attacked. As Brigadier Kippenberger observed: ‘Whatever had been the position before, there was no doubt that the enemy was now entitled to garrison the ruins, the breaches in the fifteen-foot-thick walls were nowhere complete, and we wondered whether we had gained anything.’

Peter Caddick-Adams is a Lecturer in Military and Security Studies at the United Kingdom’s Defence Academy, and author of Monte Cassino: Ten Armies in Hell and Monty and Rommel: Parallel Lives. He holds the rank of major in the British Territorial Army and has served with U.S. forces in Bosnia, Iraq, and Afghanistan.

Subscribe to the OUPblog via email or RSS.

Subscribe to only history articles on the OUPblog via email or RSS.

Image credits: (1) Source: U.S. Air Force; (2) Bundesarchiv, Bild 146-2005-0004 / Wittke / CC-BY-SA; (3) Bundesarchiv, Bild 183-J26131 / Enz / CC-BY-SA

The post The bombing of Monte Cassino appeared first on OUPblog.

By: Alice,

on 2/11/2013

Blog:

OUPblog

JacketFlap tags:

linus,

symmetry,

dorothy,

*Featured,

marjorie,

Science & Medicine,

Cultures of Science,

cyclol controversy,

Dorothy Wrinch,

I Died for Beauty,

Marjorie Senechal,

science of crystals,

wrinch,

senechal,

pauling,

cyclol,

inseparably,

History,

Biography,

US,

Remembered today for her much-publicized feud with Linus Pauling over the shape of proteins, known as “the cyclol controversy,” Dorothy Wrinch made essential contributions to the fields of Darwinism, probability and statistics, quantum mechanics, x-ray diffraction, and computer science. The first woman to receive a doctor of science degree from Oxford University, she developed an understanding of the science of crystals and of the ever-changing notion of symmetry that has been fundamental to science.

We sat down with Marjorie Senechal, author of I Died for Beauty: Dorothy Wrinch and the Cultures of Science, to explore the life of this brilliant and controversial figure.

Who was Dorothy Wrinch?

Dorothy Wrinch was a British mathematician and a student of Bertrand Russell. An exuberant, exasperating personality, she knew no boundaries, academic or otherwise. She sowed fertile seeds in many fields of science — philosophy, mathematics, seismology, probability, genetics, protein chemistry, crystallography.

What is she remembered for?

Unfortunately, she’s mainly remembered for her battle with the chemist Linus Pauling. Dorothy proposed the first-ever model for protein architecture, provoking a world-class controversy in scientific circles. Linus led her opponents; few noticed that his arguments were as wrong as her model was.

Why did he attack her research and career with such ferocity?

In those days before scientific imaging, scientists imagined. Outsized personalities, fierce ambitions, and cultural misunderstandings also played a role. And gender bias: Dorothy didn’t know her place. She didn’t suffer critics gratefully, or fools gladly. On a deeper level, the fight was philosophical. Imagination and experiment, beauty and truth are entangled inseparably, then and now.

Linus won two Nobel prizes. What became of Dorothy?

Dorothy, a single mother, came to the United States with her daughter at the beginning of World War II, and eventually settled in Massachusetts; she taught at Smith College for many years and wrote scientific books and papers. But her career never recovered. I wrote this book to find out why.

Marjorie Senechal is the Louise Wolff Kahn Professor Emerita in Mathematics and History of Science and Technology, Smith College, and Co-Editor of The Mathematical Intelligencer. She is the author of I Died for Beauty: Dorothy Wrinch and the Cultures of Science.

The post Who was Dorothy Wrinch? appeared first on OUPblog.

By: Alice,

on 2/5/2013

Blog:

OUPblog

JacketFlap tags:

watson,

vimeo,

Editor's Picks,

*Featured,

smuggling,

ssrc,

Brown University,

Colombian cocaine,

How Illicit Trade Made America,

Mexican workers,

Peter Andreas,

Smuggler Nation,

Watson Institute for International Studies,

andreas,

illicit,

smuggler,

56937394,

History,

US,

Videos,

Multimedia,

Today America is the world’s leading anti-smuggling crusader. While honorable, that title is also an ironic one when you consider America’s long and intimate history of… smuggling. Our illicit imports have ranged from West Indies molasses and Dutch gunpowder in the 18th century, to British industrial technologies and African slaves in the 19th century, to French condoms and Canadian booze in the early 20th century, to Mexican workers and Colombian cocaine in the modern era. Simply put, America was built by smugglers.

In this video from Brown University’s Watson Institute for International Studies, Peter Andreas, author of Smuggler Nation: How Illicit Trade Made America, explains America’s long relationship with smuggling and illicit trade.

Peter Andreas is a professor in the Department of Political Science and the Watson Institute for International Studies at Brown University. He was previously an Academy Scholar at Harvard University, a Research Fellow at the Brookings Institution, and an SSRC-MacArthur Foundation Fellow on International Peace and Security. Andreas has written numerous books, including Smuggler Nation: How Illicit Trade Made America, published widely in scholarly journals and policy magazines, presented Congressional testimony, written op-eds for major newspapers, and provided frequent media commentary.

The post A history of smuggling in America appeared first on OUPblog.

“Cirrus” directed by Cyriak (UK)

“Yamasuki Yamazaki” directed by Shishi Yamazaki (Japan)

“Tourniquet” directed by Jordan Bruner (US)

Music Video for Hem. Animated by Greg Lytle and Jordan Bruner.

By: JonathanK,

on 2/2/2013

Blog:

OUPblog

JacketFlap tags:

History,

US,

Oxford,

mexico,

Current Affairs,

immigration,

Geography,

border,

Berlin Wall,

Tijuana,

*Featured,

Mexican-American War,

Guadalupe Hidalgo,

Michael Dear,

Polk,

Third Nation,

nafta,

By Michael Dear

Not long ago, I passed a roadside sign in New Mexico which read: “Es una frontera, no una barrera / It’s a border, not a barrier.” This got me thinking about the nature of the international boundary line separating the US from Mexico. The sign’s message seemed accurate, but what exactly did it mean?

On 2 February 1848, a ‘Treaty of Peace, Friendship, Limits and Settlement’ was signed at Guadalupe Hidalgo, thus terminating the Mexican-American War. The conflict was ostensibly about securing the boundary of the recently annexed state of Texas, but it was clear from the outset that US President Polk’s ambition was territorial expansion. As a consequence of the Treaty, Mexico gained peace and $15 million but eventually lost one-half of its territory; the US achieved the largest land grab in its history through a war that many (including Ulysses S. Grant) regarded as dishonorable.

In recent years, I’ve traveled the entire length of the 2,000-mile US-Mexico border many times, on both sides. There are so many unexpected and inspiring places! Mutual interdependence has always been the hallmark of cross-border communities. Border people are staunchly independent and composed of many cultures with mixed loyalties. They get along perfectly well with people on the other side, but remain distrustful of far-distant national capitals. The border states are among the fastest-growing regions in both countries — places of economic dynamism, teeming contradiction, and vibrant political and cultural change.

A small fence separates densely populated Tijuana, Mexico, right, from the United States in the Border Patrol’s San Diego Sector.

Yet the border is also a place of enormous tension associated with undocumented migration and drug wars. Neither of these problems has its source in the borderlands, but border communities are where the burdens of enforcement are geographically concentrated. It’s because of our country’s obsession with security, immigration, and drugs that after 9/11 the US built massive fortifications between the two nations, and in so doing, threatened the well-being of cross-border communities.

I call the spaces between Mexico and the US a ‘third nation.’ It’s not a sovereign state, I realize, but it contains many of the elements that would otherwise warrant that title, such as a shared identity, common history, and joint traditions. Border dwellers on both sides readily assert that they have more in common with each other than with their host nations. People describe themselves as ‘transborder citizens.’ One man who crossed daily, living and working on both sides, told me: “I forget which side of the border I’m on.” The boundary line is a connective membrane, not a separation. It’s easy to reimagine these bi-national communities as a ‘third nation’ slotted snugly in the space between two countries. (The existing Tohono O’odham Nation already extends across the borderline in the states of Arizona and Sonora.)

But there is more to the third nation than a cognitive awareness. Both sides are also deeply connected through trade, family, leisure, shopping, culture, and legal ties. Border dwellers are intimately linked through their everyday material lives, and these links are buttressed by innumerable formal and informal institutional arrangements (NAFTA, for example, as well as water and environmental conservation agreements). Continuity and connectivity across the border line existed for centuries before the border was put in place, reaching back to the Spanish colonial era and prehistoric Mesoamerican times.

Do the new fortifications built by the US government since 9/11 pose a threat to the well-being of borderland communities? Certainly there have been interruptions to cross-border lives: crossing times have increased; the number of US Border Patrol ‘boots on ground’ has doubled; and a new ‘gulag’ of detention centers has been instituted to apprehend, prosecute and deport all undocumented migrants. But trade has continued to increase, and cross-border lives are undiminished. US governments are opening up new and expanded border crossing facilities (known as ports of entry) at record levels. Gas prices in Mexican border towns are tied to the cost of gasoline on the other side. The third nation is essential to the prosperity of both countries.

So yes, the roadside sign in New Mexico was correct. The line between Mexico and the US is a border in the geopolitical sense, but it is submerged by communities that do not regard it as a barrier to centuries-old cross-border intercourse. The international boundary line is only just over a century-and-a-half old. Historically, there was no barrier, and the border is not a barrier today.

The walls between Mexico and the US will come down. Walls always do. The Berlin Wall was torn down virtually overnight, its fragments sold as souvenirs of a calamitous Cold War. The Great Wall of China was transformed into a global tourist attraction. Left untended, the US-Mexico Wall will collapse under the combined assault of avid recyclers, souvenir hunters, and local residents offended by its mere presence.

As the US prepares once again to consider immigration reform, let the focus this time be on immigration and integration. The framers of the Treaty of Guadalupe Hidalgo were charged with making the US-Mexico border, but on this anniversary of the Treaty’s signing, we may best honor the past by exploring a future when the border no longer exists. Learning from the lives of cross-border communities in the third nation would be an appropriate place to begin.

Michael Dear is a professor in the College of Environmental Design at the University of California, Berkeley, and author of Why Walls Won’t Work: Repairing the US-Mexico Divide (Oxford University Press).

The post “Third Nation” along the US-Mexico border appeared first on OUPblog.

By: ErinF,

on 2/2/2013

Blog:

OUPblog

JacketFlap tags:

History,

US,

groundhog day,

Sociology,

groundhog,

Pennsylvania,

Multimedia,

jefferson,

census,

Research Tools,

imbolc,

punxsutawney phil,

Social Sciences,

*Featured,

candlemas,

punxsutawney,

social explorer,

Sydney Beveridge,

hibernating,

demography,

Online Products,

Jefferson County,

‘groundhog,

examining census,

our mapping,

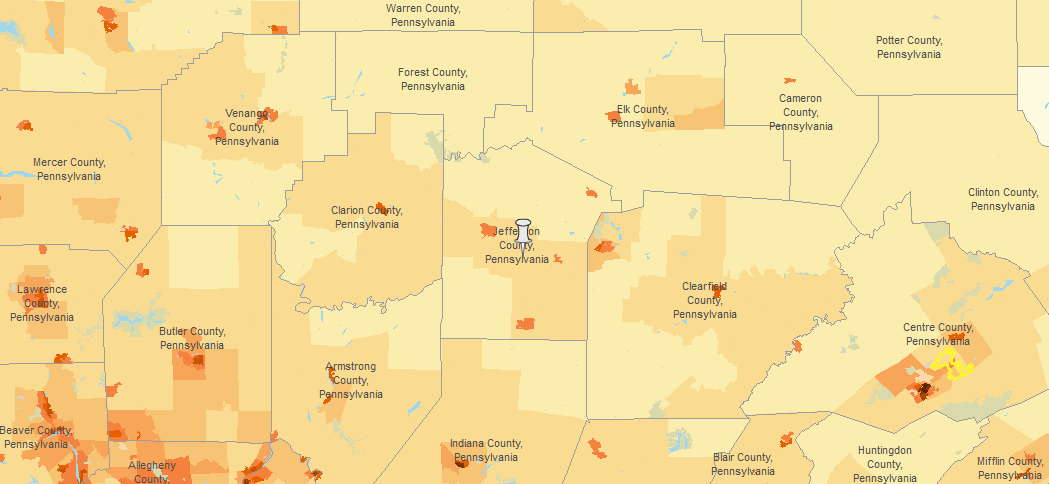

In the United States, a German belief about the badger (applied in Switzerland to the wolf) has been transferred to the woodchuck, better known as the groundhog: on Candlemas he breaks his hibernation in order to observe the weather; if he can see his shadow he returns to his slumbers for six weeks, but if it rains he stays up and about, since winter will soon be over. This has earned Candlemas the name of ‘Groundhog Day’. In Quarryville, Lancaster County, Pa., a Slumbering Groundhog Lodge was formed, whose members, wearing silk hats and carrying canes, went out in search of a groundhog burrow; on finding one they watched its inhabitant’s conduct and reported back. Of twenty observations recorded, eight prognostications proved true, seven false, and five were indeterminate. The ritual is now carried on at Punxsutawney, Pa., where the weather prophet has been named Punxsutawney Phil. (The Oxford Companion to the Year)

By Sydney Beveridge

Every February 2, people across Pennsylvania and the world look to a famous rodent to answer the question: when will spring come?

For over 120 years, Punxsutawney Phil Soweby (Punxsutawney Phil for short) has offered his predictions, based on whether he sees his shadow (more winter) or not (an early spring).

The first official Groundhog Day celebration took place in 1887 and Phil has gone on to star in a blockbuster film, dominate the early February news cycle, and even appear on Oprah. (He also has his own Beanie Baby and his own flower.)

In addition to weather predictions, Phil also loves data, and while people think he is hibernating, he is actually conducting demographic analysis. As a Social Explorer subscriber, he used the site’s mapping and reporting tools to look at the composition of his hometown.

Punxsutawney, PA, located outside of Pittsburgh, is part of Jefferson County. Examining Census data from 1890, Phil learned that the county’s population was 44,405 around the time of his first predictions. While the rest of the nation was becoming more urban, Jefferson County remained rural, with only one-eighth of its population living in places of 2,500 people or more (compared to nearly half statewide and more than a third in the US).

Many Jefferson residents worked in the farming industry. Back then, there were 3.2 families for every farm in Jefferson County, fewer than the 5.0 families per farm statewide; in other words, farms were more plentiful relative to the population than in the rest of the state.

Less than three decades after the Civil War, the county (located in a northern state) was 99.9 percent white, slightly higher than the state as a whole (97.9 percent) and well above the national figure (87.8 percent). (The Census also noted that there was one Chinese resident of Jefferson County in 1890.)

Groundhog Day was originally Candlemas, a day on which, according to a German belief (which, as the Oxford Companion to the Year notes, originally concerned the badger), a hibernating animal took a break from slumbering to check the weather. If the creature sees its shadow and is frightened, winter will hold on and hibernation will continue; if not, the groundhog will stay awake and spring will come early. Back in 1890, there were 703 Germans living in Jefferson County (representing 1.6 percent of the county population and 11.3 percent of the foreign born), making Germany the fourth most common foreign birthplace, behind England, Scotland, and Austria. Groundhog Day is also said to have Celtic roots, so perhaps the 623 Irish residents (representing 1.4 percent of the county population and 10.1 percent of the foreign born) helped to establish the tradition in Pennsylvania.

Looking to today’s numbers, Phil was astonished to learn from the 2010 Census that Jefferson County has just 795 more people than it did 120 years ago. While Jefferson grew by 1.8 percent, the state grew by 141.6 percent and the nation grew by 393.0 percent.

2010 Census: Jefferson County, PA, Population Density

Phil dug deeper. The 2008-10 American Community Survey data reveal that the once-prominent farming industry has shrunk considerably. (Because it is a small group, “agriculture” is now grouped with other industries including forestry, fishing and hunting, and mining.) While Jefferson residents are more likely to work in the industry than other Pennsylvanians, that share represents just 4.4 percent of the employed civilian workforce.

According to the Census, Jefferson is still predominantly white (98.3 percent), while the rest of the state and nation have become somewhat more diverse (81.9 percent white in Pennsylvania and 72.4 percent nationwide). Today there are 24 Chinese residents (out of a total of 92 Asian residents).

As Phil rises from his burrow this February 2, he will survey the shadows with new insight into his community and audience. To learn more about Punxsutawney Phil’s hometown burrow (and your own borough), please visit our mapping and reporting tools.

Sydney Beveridge is the Media and Content Editor for Social Explorer, where she works on the blog, curriculum materials, how-to videos, social media outreach, presentations and strategic planning. She is a graduate of Swarthmore College and the Columbia University Graduate School of Journalism. A version of this article originally appeared on the Social Explorer blog. You can use Social Explorer’s mapping and reporting tools to investigate dreams, freedoms, and equality further.

Social Explorer is an online research tool designed to provide quick and easy access to current and historical census data and demographic information. The easy-to-use web interface lets users create maps and reports to better illustrate, analyze and understand demography and social change. From research libraries to classrooms to the front page of the New York Times, Social Explorer is helping people engage with society and science.

The post Burrowing into Punxsutawney Phil’s hometown data appeared first on OUPblog.

By: Alice,

on 2/1/2013

Blog:

OUPblog

JacketFlap tags:

History,

US,

Latino,

latina,

mosaic,

oral history,

Digital Age,

*Featured,

oxford journals,

Oral History Review,

Caitlin Tyler-Richards,

digital scholarship,

Freedom Mosaic,

oral historians,

VOCES Oral History Project,

voces,

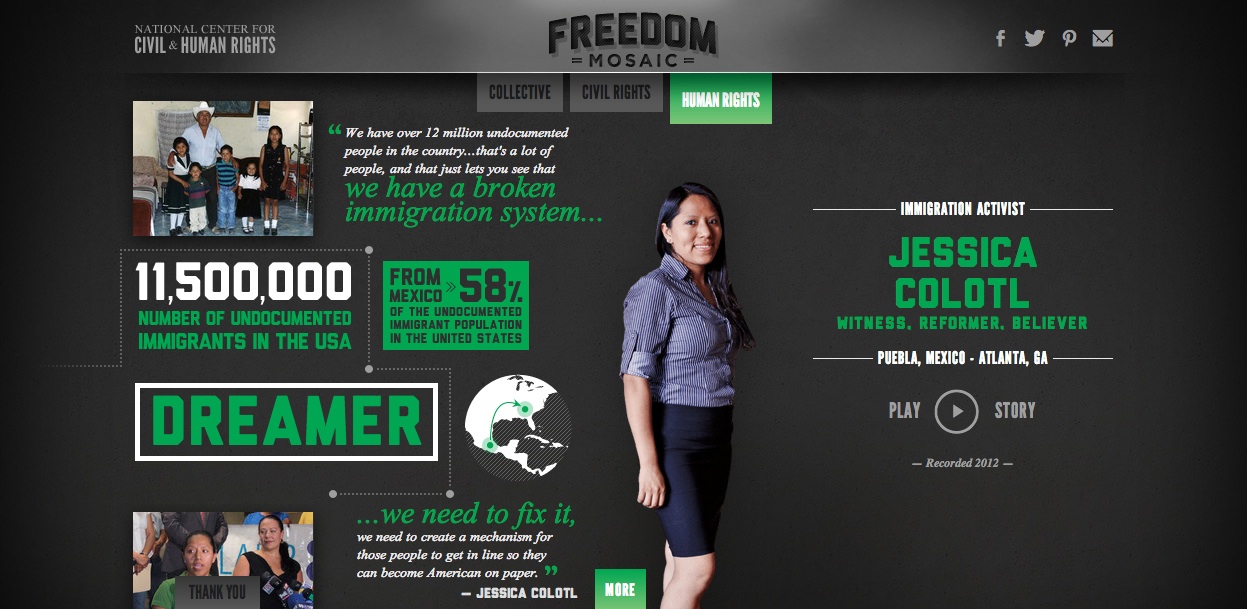

By Caitlin Tyler-Richards

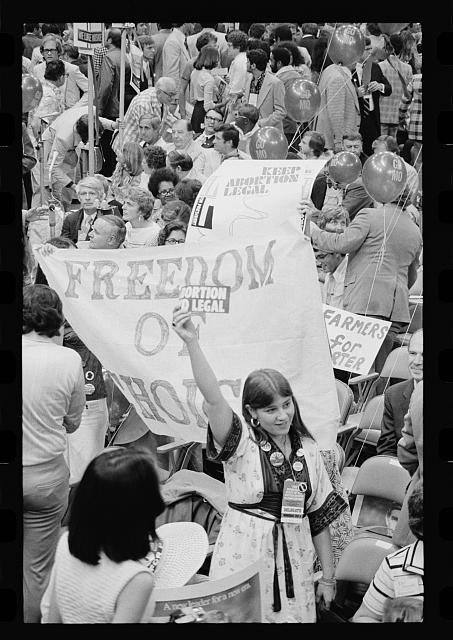

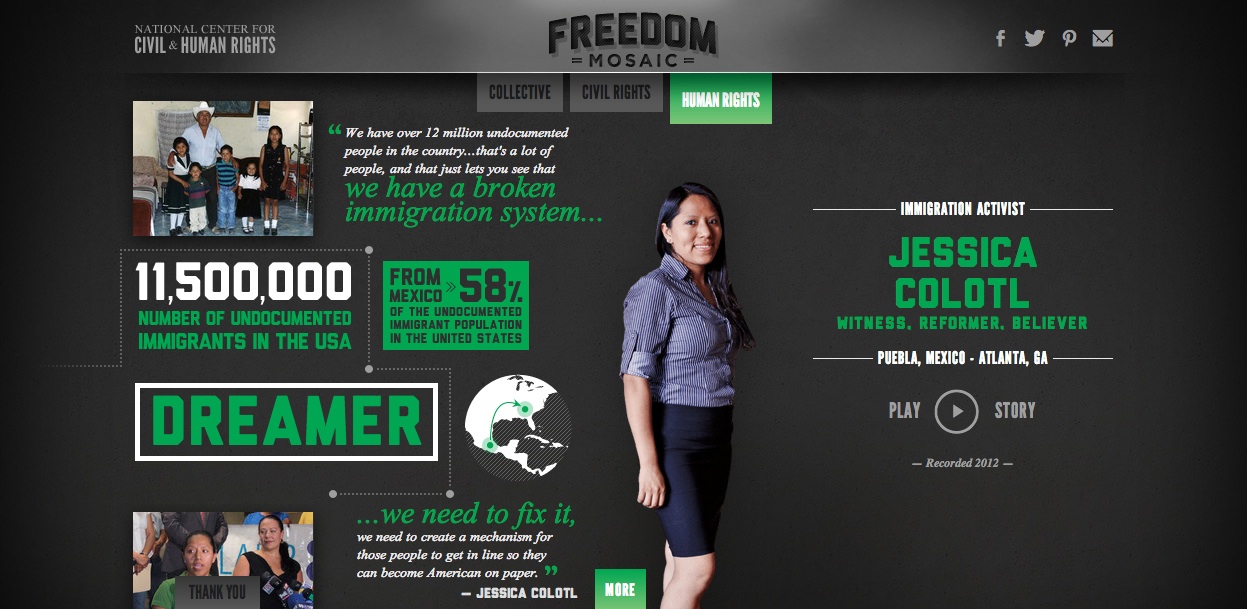

In November 2012, a thread appeared on the H-Net oral history listserv with the enticing subject line “experimental uses of oral history.” Amid assorted student projects and artistic explorations, two projects in particular caught my eye: the VOCES Oral History Project and the Freedom Mosaic. As we work towards our upcoming special issue on Oral History in the Digital Age, I’ve been mulling over how oral historians negotiate online spaces, and how the Internet and related advancing technologies can inspire, but also challenge, the manner in which they share their scholarship. I believe the VOCES Oral History Project and the Freedom Mosaic offer two distinct paths historians may take in carving out online space, and raise an interesting issue regarding content versus aesthetics.

Based out of the University of Texas at Austin, the VOCES Oral History Project (previously the US Latino & Latina WWII Oral History Project) launched in the spring of 1999 in response to the dearth of Latin@ experiences in WWII scholarship. Since its inception, the project has conducted over 500 interviews, which have spawned a variety of media, from mini-documentaries to the play Voices of Valor by James E. Garcia. My personal favorite is the Narratives Newspaper (1999-2004), a biannually printed collection of stories written by journalism students, based on interviews conducted with the WWII veterans. Thanks to a grant from the Institute of Museum and Library Services, in 2009 VOCES expanded its project to include the Vietnam and Korean Wars, hence the name change.

VOCES does not have the most technologically innovative or visually attractive website; it relies on text and links much more than contemporary web design generally allows. However, it still serves as a strong online base for the project, allowing the staff to maintain and occasionally expand the project and facilitating greater access for the public. For instance, VOCES has transferred the Narratives Newspaper stories into an indexed, online archive, one which they continue to populate with new reports featuring personal photos. The website also helps to sustain the project by inviting the public to participate, encouraging them to sign up for VOCES’ volunteer database or conduct their own interviews. They continue to offer a print subscription to a biannual Narratives Newsletter, which speaks to the manner in which they straddle the line between print and digital. It’s understandable given their audience (i.e. war veterans), yet I wonder if it also hinders a full transition into the digital realm.

The second project that intrigued me was the Freedom Mosaic, a collaboration between the National Center for Civil and Human Rights, CNN and the Ford Foundation to share “individual stories that changed history” from major civil and human rights movements. The Freedom Mosaic is a professionally designed “microsite” that opens with an attractive, interactive interface made up of interviewees’ pictures, which viewers can click on to access multimedia profiles of civil and human rights participants. Each profile includes something like a player’s card for the interviewee on the left side of the page: imagine a title like “Visionary,” a full-body picture, an inspiring quote, personal photos, and perhaps a map. On the right side, viewers can click “Play Story” to watch a mini-documentary on the subject, including interview clips. Viewers can also click the tab “More” at the bottom to bring up a brief text biography, additional interviews and interview transcripts.

The Freedom Mosaic is not a standard oral history project. According to Dr. Clifford Kuhn, who served as the consulting historian and interviewer, and introduced the site to the H-Net oral history listserv, “The idea was to develop a dynamic web site that departed from many of the archivally-oriented civil rights-themes web sites, in an attempt to especially appeal to younger users, roughly 15-30 years old.” On one hand, I greatly approve of beautifully designed projects that trick the innocent Internet explorer into learning something, and I think the Freedom Mosaic could succeed. However, I’m bothered by the site’s dearth of… history. Especially when sharing contemporary stories like that of immigration activist Jessica Colotl, I would have liked a bit more background on immigration in the United States than her brief bio provides.

VOCES and the Freedom Mosaic are excellent examples of how historians may establish a space online, amid the cat memes and indie movie blogs, for academic research. While I have my concerns, I believe both sites succeed in fulfilling their projects’ respective goals, whether that be archiving or eye-catching. However, I would ask you, lovely readers: Is there a template between VOCES’ text-heavy archive and the Freedom Mosaic’s dazzling pentagons that might better serve in sharing oral history? What would a rubric for “successful oral history sites” look like? Or should every site be tailor-made for each project?

To the comments! The more you share, the better the eventual OHR website will look.

Caitlin Tyler-Richards is the editorial/media assistant at the Oral History Review. When not sharing profound witticisms at @OralHistReview, Caitlin pursues a PhD in African History at the University of Wisconsin-Madison. Her research revolves around the intersection of West African history, literature and identity construction, as well as a fledgling interest in digital humanities. Before coming to Madison, Caitlin worked for the Lannan Center for Poetics and Social Practice at Georgetown University.

The Oral History Review, published by the Oral History Association, is the U.S. journal of record for the theory and practice of oral history. Its primary mission is to explore the nature and significance of oral history and advance understanding of the field among scholars, educators, practitioners, and the general public. Follow them on Twitter at @oralhistreview and like them on Facebook to preview the latest from the Review, learn about other oral history projects, connect with oral history centers across the world, and discover topics that you may never have thought were even remotely connected to the study of oral history. Keep an eye out for upcoming posts on the OUPblog for addenda to past articles, interviews with scholars in oral history and related fields, and fieldnotes on conferences, workshops, etc.

The post Oral historians and online spaces appeared first on OUPblog.

By: Alice,

on 1/30/2013

Blog:

OUPblog

JacketFlap tags:

History,

peace,

war,

US,

Iraq,

Current Affairs,

Afghanistan,

Barack Obama,

George Bush,

Chuck Hagel,

John Kerry,

hagel,

*Featured,

Law & Politics,

American Presidency at War,

Elusive Victories,

Hamid Karzai,

national security cabinet,

non-interventionism,

By Andrew J. Polsky

The signs are clear. President Barack Obama has nominated two leading skeptics of American military intervention for the most important national security cabinet posts. Meeting with Afghan President Hamid Karzai, who would prefer a substantial American residual presence after the last American combat troops have departed in 2014, Obama has signaled that he wants a more rapid transition out of an active combat role (perhaps as soon as this spring, rather than during the summer). The president has also countered a push from his own military advisors to keep a sizable force in Afghanistan indefinitely by agreeing to consider the “zero option” of a complete withdrawal. We appear on the verge of a non-interventionist moment in American politics, when leaders and the general public alike shun major military actions.

Only a decade ago, George W. Bush stood before the graduating class at West Point to proclaim the dawn of a new era in American security policy. Neither deterrence nor containment, he declared, sufficed to deal with the threat posed by “shadowy terrorist networks with no nation or citizens to defend” or with “unbalanced dictators” possessing weapons of mass destruction. “[O]ur security will require all Americans to be forward looking and resolute, to be ready for preemptive action when necessary to defend our liberty and to defend our lives.” This new “Bush Doctrine” would soon be put into effect. In March 2003, the president ordered the US military to invade Iraq to remove one of those “unbalanced dictators,” Saddam Hussein.

This post-9/11 sense of assertiveness did not last. Long and costly wars in Iraq and Afghanistan discredited the leaders responsible and curbed any popular taste for military intervention on demand. Over the past two years, these reservations have become obvious as other situations arose that might have invited the use of troops just a few years earlier: Obama intervened in Libya but refused to send ground forces; the administration has rejected direct measures in the Syrian civil war such as no-fly zones; and the president refused to be stampeded into bombing Iranian nuclear facilities.

The reaction against frustrating wars follows a familiar historical pattern. In the aftermath of both the Korean War and the Vietnam War, Americans expressed a similar reluctance about military intervention. Soon after the 1953 truce that ended the Korean stalemate, the Eisenhower administration weighed intervening in Indochina to forestall the collapse of the French position as a Viet Minh victory at Dien Bien Phu loomed. As related by Fredrik Logevall in his excellent recent book, Embers of War, both Eisenhower and Secretary of State John Foster Dulles were fully prepared to deploy American troops. But they realized that in the backwash from Korea neither the American people nor Congress would countenance unilateral action. Congressional leaders indicated that allies, the British in particular, would need to participate. Unable to secure agreement from British foreign secretary Anthony Eden, Eisenhower and Dulles were thwarted and decided instead to throw their support behind the new South Vietnamese regime of Ngo Dinh Diem.

Another period marked by reluctance to use force followed the Vietnam War. Once the last American troops withdrew in 1973, Congress rejected the possibility they might return, banning intervention in Indochina without explicit legislative approval. Congress also adopted the War Powers Resolution, more significant as a symbolic statement about the wish to avoid being drawn into a protracted military conflict by presidential initiative than as a practical measure to curb presidential bellicosity.

It is no coincidence that Obama’s key foreign and defense policy nominees were shaped by the crucible of Vietnam. Both Chuck Hagel and John Kerry fought in that war and came away with “the same sensibility about the futilities of war.” Their outlook contrasts sharply with that of Obama’s first-term selections to run the State Department and the Pentagon: both Hillary Clinton and Robert Gates backed an increased commitment of troops in Afghanistan in 2009. Although Senators Hagel and Kerry supported the 2002 congressional resolution authorizing the use of force in Iraq, they became early critics of the war. Hagel has expressed doubts about retaining American troops in Afghanistan or using force against Iran.

Given the present climate, we are unlikely to see a major American military commitment during the next several years. Obama’s choice of Kerry and Hagel reflects his view that, as he put it in the 2012 presidential debate about foreign policy, the time has come for nation-building at home. It will suffice in the short run to hold distant threats at bay. Insofar as possible, the United States will rely on economic sanctions and “light footprint” methods such as drone strikes on suspected terrorists.

If the past is any guide, however, this non-interventionist moment won’t last. The post-Korea and post-Vietnam interludes of reluctance gave way within a decade to a renewed willingness to send American troops into combat. By the mid-1960s, Lyndon Johnson had embraced escalation in Vietnam; Ronald Reagan made his point with the over-hyped 1983 invasion of Grenada to crush its pipsqueak revolutionary regime. The American people backed both decisions.

Interventionism will return because the underlying conditions that invite it have not changed significantly. In the global order, the United States remains the hegemonic power that seeks to preserve stability. We retain a military that is more powerful by several orders of magnitude than any other, and will surely remain so even after the anticipated reductions in defense spending. Psychologically, the American people have long been sensitive to distant threats, and we have shown that we can be stampeded into endorsing military action when a president identifies a danger to our security. (And presidents themselves become vulnerable to charges that they are tolerating American decline whenever a hostile regime comes to power anywhere in the world.)

Those of us who question the American proclivity to resort to the use of force, then, should enjoy the moment.

Andrew Polsky is Professor of Political Science at Hunter College and the CUNY Graduate Center. A former editor of the journal Polity, his most recent book is Elusive Victories: The American Presidency at War. Read Andrew Polsky’s previous blog posts.

Subscribe to only law and politics articles on the OUPblog via email or RSS.

Subscribe to only American history articles on the OUPblog via email or RSS.

The post The non-interventionist moment appeared first on OUPblog.

By: Alice,

on 1/30/2013

Blog:

OUPblog

JacketFlap tags:

civil war,

illness,

African American Studies,

suffering,

refugee,

African American History,

emancipation proclamation,

reconstruction,

*Featured,

contraband,

Online Products,

Oxford African American Studies Center,

Oxford AASC,

Tim Allen,

freedmen,

Freedmen's Bureau,

health crisis,

Jim Downs,

Sick from Freedom,

freedpeople,

History,

US,

The editors of the Oxford African American Studies Center spoke to Professor Jim Downs, author of Sick from Freedom: African-American Illness and Suffering during the Civil War and Reconstruction, about the legacy of the Emancipation Proclamation 150 years after it was first issued. We discuss the health crisis that affected so many freedpeople after emancipation, current views of the Emancipation Proclamation, and insights into the public health crises of today.

Emancipation was problematic, indeed disastrous, for so many freedpeople, particularly in terms of their health. What was the connection between newfound freedom and health?

I would not say that emancipation was problematic; it was a critical and necessary step in ending slavery. I would first argue that emancipation was not an ending point but part of a protracted process that began with the collapse of slavery. By examining freedpeople’s health conditions, we can see how that process unfolded—we can see how enslaved people liberated themselves from the shackles of Southern plantations but then were confronted with a number of questions: How would they survive? Where would they get their next meal? Where were they to live? How would they survive in a country torn apart by war and disease?

Because freedpeople lacked many of these basic necessities, hundreds of thousands of former slaves became sick and died.

The traditional narrative of emancipation begins with liberation from slavery in 1862-63, follows freedpeople returning to Southern plantations after the war for employment in 1865, and then culminates with the grassroots political mobilization that led to the Reconstruction Amendments in the late 1860s. This story treats formal politics as the central organizing principle in the destruction of slavery and the movement toward citizenship without considering the realities of freedpeople’s lives during this seven- to eight-year period. By investigating freedpeople’s health conditions, we first notice that many formerly enslaved people died during this period and did not live to see the amendments that granted citizenship and suffrage. They survived slavery but perished during emancipation, a fact that few historians have considered. Additionally, for those who did survive both slavery and emancipation, it was not such a triumphant story; without food, clothing, shelter, and medicine, emancipation unleashed a number of insurmountable challenges for the newly freed.

Was the health crisis that befell freedpeople after emancipation the fault of any person, government, or organization? Was the lack of a sufficient social support system a product of ignorance or, rather, a lack of concern?

The health crises that befell freedpeople after emancipation resulted largely from the mere fact that no one considered how freedpeople would survive the war and emancipation; no one was prepared for the human realities of emancipation. Congress and the President focused on the political question that emancipation raised: what was the status of formerly enslaved people in the Republic?

When the federal government did consider freedpeople’s condition in the final years of the war, it thought the solution was simply to return freedpeople to Southern plantations as laborers. Yet no one in Washington thought through the process of agricultural production: Where was the fertile land? (Much of it was destroyed during the war, and countless acres were depleted before the war, which was why Southern planters wanted to move west.) How long would crops take to grow? How would freedpeople survive in the meantime?

Meanwhile, a drought in the immediate aftermath of the war thwarted even the most earnest attempts to develop a free labor economy in the South. Therefore, as a historian, I am less invested in arguing that someone is at fault, and more committed to understanding the various economic and political forces that led to the outbreak of sickness and suffering. Creating a new economic system in the South required time and planning; it could not be accomplished simply by sending freedpeople back to Southern plantations and farms. And in the interim, while federal leaders in Washington believed this was a good plan, a different reality unfolded on the ground in the postwar South. Land and labor did not offer an immediate panacea for the war’s destruction, the process of emancipation, and the ultimate rebuilding of the South. Consequently, freedpeople suffered during this period.

When the federal government did establish the Medical Division of the Freedmen’s Bureau (an agency that established over 40 hospitals in the South, employed over 120 physicians, and treated an estimated one million freedpeople), the institution often lacked the finances, personnel, and resources to stop the spread of disease. In sum, the government did not create this division with a humanitarian (or, to use 19th-century parlance, “benevolence”) mission, but rather designed this institution with the hope of creating a healthy labor force.