Viewing: Blog Posts Tagged with: Politics, Most Recent at Top


Blog: E is for Erik

JacketFlap tags: polar bears, kaktovik alaska, presidential polar bear post card project, politics

Blog: E is for Erik

JacketFlap tags: Harts Pass, election 2016, politics

Snow job: (noun) definition - a strong effort to make someone believe something by saying things that are not true or sincere.

Blog: E is for Erik

JacketFlap tags: advocacy, politics, polar bear, presidential polar bear post card project

Blog: The Mumpsimus

JacketFlap tags: politics, academia

Hopefully, someday my contribution to peace

Will help just a bit to turn the tide

And perhaps I can tell my children six

And later on their own children

That at least in the future they need not be silent

When they are asked, "Where was your mother, when?"

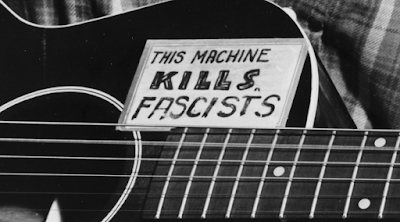

—Pete Seeger, "My Name Is Lisa Kalvelage"

Faculty and grad students at my university are being targeted by right-wing groups who publicize their names and contact information because these faculty and students have criticized racist and sexist acts on campus. The Women's Studies department in particular has been attacked in the state newspaper for the crime of offering supplies to students who were participating in a protest against Donald Trump. The president of our university just sent out an email giving staff and students information about what to do if they are attacked. Numerous students have reported being harassed, spat upon, told they'd be deported, etc.

The right wing detests many segments of academia. The basic ideas of women's studies programs, ethnic studies programs, queer studies programs, etc. are anathema to them, but right-wing vitriol is not limited to the humanities — ask a climate scientist what life is like these days.

These trends are not new, but they are emboldened and concentrated by the success of Donald Trump and the nazis, klansmen, and various troglodytes associated with him. Hate crimes are on the rise. The media, trapped in the ideology of false equivalence, terrified of losing access to people in power, besotted by celebrity, makes white supremacists look like GQ models and ends up running headlines questioning if Jews are people. Things will only get worse.

The chilling effect is already strong.

Within the last few days, I've heard from a number of academics (some with tenure) who say they are being very careful. They're changing their social media habits (in some cases, deleting their social media accounts altogether), making themselves less accessible, being careful not to show any political partiality around their students. They need their jobs, after all. They have bills to pay, kids to support, lives to live. Just yesterday, one of my friends was ordered to a meeting with a dean to justify a Tweet from her personal account, a Tweet I had to read three times before I could figure out what in it might ever be construed as "misrepresenting the university". She's got tenure, at least, so she might be safe for now. For now.

This is not to say that anti-Trump or left-leaning faculty ought to be celebrated as always correct and perfect. They're as capable of being incoherent, punitive, and authoritarian in their views as Trump is, and a few of the things some of my friends and colleagues said are things I completely disagree with. But they're human beings at a highly anxious moment expressing their views. They're not sending hate mail or harassing people, yet now they are targets of hate and harassment campaigns and many have pulled back, hidden, or deleted whatever they could of their public presence. These are wonderful people, teachers, and scholars. We need to hear their voices. We need them not to be strangled by fear. But they're scared.

Most teaching faculty in the U.S. don't have tenure, and tenure is often weak. The neoliberalization of academia has seen to that, and there's little reason to believe things will get better. The Wisconsin model is one the Republicans hope to make national, and with control of all levels of federal government and a majority of state governments, that goal is within their reach.

The series of moral panics that have been spreading through academia — and spreading even more in the discourse around and about academia — is effective in its work, and it is especially effective now in the Age of Trump. "Political correctness" is a powerful term within the discourse, one that the right wing uses skillfully to stifle dissent and to create a new common sense that is more favorable to the right's perspective. State universities like mine are especially vulnerable to the interference of politicians, even though at this point we get so little funding from the state that the term barely applies anymore. The triumph of the right wingers means institutions susceptible to political pressure will adapt to the new rulers.

Moves toward fascism may seem inimical to academia, but they're not at all. Universities can adapt.

I don't have any original or even particularly insightful answers to this stuff. I've been reading lots of Stuart Hall, Audre Lorde, and Victor Klemperer. I sent money to organizations committed to standing against Trump and fascism. I'm matching my publisher's fundraiser for the ACLU. I'm adding Parable of the Sower to the readings in my First-Year Composition course in the spring. I stuck a pink triangle pin on my backpack; I hadn't done so for many years, but it feels important now to be visible.

I've got a lot less to lose than many of my friends and colleagues. (I'm a white male for one thing. White supremacist patriarchy lets us get away with more.) I'll protest, I'll speak out, I'll risk what I can see my way to risk.

Back in the good olde days of George W. Bush, I was a columnist for a local newspaper. My assignment was to be the resident left-winger. My first column ran just before the attacks of September 11, 2001. In March of 2002, I began a column with the sentence, "If George W. Bush really wants to clean up politics in this country, the best thing he could do would be to resign." I was a young teacher at a private high school, and readers of the newspaper called the headmaster to try to get me fired. He liked me and had no desire to fire me. He encouraged people who called him to give me a call (my phone number was public), since, he told them, I'm a reasonable guy. "Not one of them would," he said to me a week or so later. "They're cowards. And good for you for speaking up. You and I don't agree about everything, but so what? Why would I ever want to have a teacher here who wasn't truthful to himself? Write what you need to write." A couple readers wrote relatively polite letters to the editor denouncing me for my lack of patriotism. (Now they'd just send an email in ALL CAPS and filled with abusive epithets and maybe a couple death threats for good measure. Those were simpler days...)

I kept at it for a while, and indeed eventually got a few phone calls, and now and then somebody wrote to the school to complain about a person with such unpatriotic and subversive ideas teaching the young and impressionable children, and once a deacon came to the school to speak with me between classes because he thought I needed God. I wasn't especially attached to writing those columns; it was just something that seemed like it needed doing, and I didn't have many other writing opportunities at the time. Eventually, I gave it up for blogging.

I don't think my words changed anybody's mind, I don't think I particularly helped anybody through writing those columns, and I am certain that I could have done more, risked more, donated more, written more. But I look back on it all now with some pride, because lots of people who later said they didn't support the catastrophic U.S. wars in Iraq and Afghanistan really did at the time. It's easy to forget now how much cultural pressure there was after 9/11 to toe the line, to keep your radical ideas to yourself, to not make waves, not buck the trends or upset the ship of state. People said we had to support the President even if we didn't agree with him. People said we needed to be united, even if it meant being united around murder, destruction, and hatred. Some of us disagreed, and the proof of my own stand remains in the pages of the Record Enterprise of Plymouth, NH fifteen years ago.

There are many forces in my life right now saying it would be prudent for me not to associate my own name with seemingly radical beliefs or actions. I don't agree. I will not support Donald Trump or his minions. I will continue to compare them to Hitler and the Nazis as long as their actions and words continue to evoke fascism. I will continue to advocate for an academia that seeks ways to shed the neoliberalism that has so corrupted the academic mission, and I will stand with students and faculty and staff who are marginalized, oppressed, precarious. Solidarity forever.

I will do my damnedest not to risk collaboration with oppression. As an American citizen, it's impossible not to have some complicity in the wars and weaponry our taxes pay for, or in the many ways our lifestyles destroy the biosphere, or — well, the list of atrocities we contribute to is long, and the whole country was founded on the sins of genocide and slavery, sins still repercussing at the present moment. I know this. I'm not seeking to hide the double/triple/infinite binds except to say there are some things we do not need to go along with, even as deep in the swamp of complicity as we may be.

It's important to pay attention to attacks and to chilling effects, important to make your own position known, to try hard not to collaborate with a regime of repression. We do what we can, and protect what we must. Some people can only resist quietly. Others will put their lives on the line. What matters is the resistance.

What matters is being able to stand tall and answer honorably when the future asks, "Where were you, when...?"

Standing Rock

Blog: E is for Erik

JacketFlap tags: Harts Pass, election 2016, politics

Blog: The Mumpsimus

JacketFlap tags: Blood: Stories, Black Lawrence Press, politics, ACLU

My publisher, Black Lawrence Press, has announced that for every book they sell through their website from now through the end of the year, they will donate $1 to the American Civil Liberties Union.

Blog: The Mumpsimus

JacketFlap tags: New York Times, history, politics, World War II, newspapers, Hitler, Nazis

In the archives of the New York Times, materials about Germany and the rise of the Nazis to power are vast. It would take days to read through it all. Though it would be an informative experience, I don't have the time to do so at the moment, but I was curious to see the general progression of news and opinion as it all happened.

Here are a few items that stuck out to me as I skimmed around:

1932

7 February

12 June

Blog: TWO WRITING TEACHERS

JacketFlap tags: community, politics, current events

Blog: E is for Erik

JacketFlap tags: politics, Harts Pass, elections 2016

Blog: E is for Erik

JacketFlap tags: halloween, politics, Harts Pass

Blog: OUPblog

JacketFlap tags: History, Politics, Fidel Castro, America, nuclear war, cold war, Soviet Union, CIA, john f. kennedy, Cuban Missile Crisis, OBO, *Featured, Nikita Khrushchev, Online products, Oxford Bibliographies, Bay of Pigs, Cuban-American relations, jonathan colman, October 1962

The Cuban Missile Crisis was a six-day public confrontation in October 1962 between the United States and the Soviet Union over the presence of Soviet strategic nuclear missiles in Cuba. It ended when the Soviets agreed to remove the weapons in return for a US agreement not to invade Cuba and a secret assurance that American missiles in Turkey would be withdrawn. The confrontation stemmed from the ideological rivalries of the Cold War.

The post The Cuban missile crisis appeared first on OUPblog.

Blog: OUPblog

JacketFlap tags: Books, Sociology, Politics, australia, Social Sciences, *Featured, Business & Economics, public servant, british colonies, bureaucracy, Only in Australia, The History Politics and Economics of Australian Exceptionalism, William Coleman, Australian Bureau of Statistics, Battle of Yorktown, colonial regime, Nelson T. Johnson, New World society, officialdom

‘Public Servant’ — in the sense of ‘government employee’ — is a term that originated in the earliest days of the European settlement of Australia. This coinage is surely emblematic of how large bureaucracy looms in Australia. Bureaucracy, it has been well said, is Australia’s great ‘talent,’ and “the gift is exercised on a massive scale” (Australian Democracy, A.F. Davies 1958). This may surprise you. It surprises visitors, and excruciates them.

The post Australia in three words, part 3 — “Public servant” appeared first on OUPblog.

Blog: OUPblog

JacketFlap tags: global issues, millennium development goals, Social Sciences, *Featured, religious fundamentalism, Online products, world politics, refugee crisis, 2008 financial crisis, brexit, A Dictionary of Contemporary World History, Christopher Riches, History, Politics, global warming, World, civil war, Sudan, Syria, nationalism, catalonia, oxford reference online

Over the past 30 years, I have worked on many reference books, and so am no stranger to recording change. However, the pace of change seems to have become more frantic in the second decade of this century. Why might this be? One reason, of course, is that, with 24-hour news and the internet, information is transmitted at great speed. Nearly every country has online news sites which give an indication of the issues of political importance.

The post Why is the world changing so fast? appeared first on OUPblog.

Blog: OUPblog

JacketFlap tags: What Everyone Needs to Know, sexual assault, *Featured, student protests, WENTK, racial inequality, campus politics, Chief Diversity Officer, Jonathan Zimmerman, political protests, undergraduate education, university administrators, university policies, Books, diversity, Education, Politics, Racism, social change, university, affirmative action

Like their forebears in the 1960s, today’s students blasted university leaders as slick mouthpieces who cared more about their reputations than about the people in their charge. But unlike their predecessors, these protesters demand more administrative control over university affairs, not less. That’s a childlike position. It’s time for them to take control of their future, instead of waiting for administrators to shape it.

The post How university students infantilise themselves appeared first on OUPblog.

Blog: OUPblog

JacketFlap tags: africa, Journals, Politics, ghana, Social Sciences, *Featured, African Affairs, African scholars, journal publishing, scholarly publishing, academic scholarship, african scholarship, Africa-based scholars, African Research Universities Alliance, Peace A. Medie, Peace Medie

A quick scan of issues of the most highly ranked African studies journals published within the past year will reveal only a handful of articles published by Africa-based authors. The results would not be any better in other fields of study. This underrepresentation of scholars from the continent has led to calls for changes in African universities, with a focus on capacity building. The barriers to research and publication in most public universities in Africa are many.

The post Engendering debate and collaboration in African universities appeared first on OUPblog.

Blog: OUPblog

JacketFlap tags: Books, Politics, European Union, Social Sciences, *Featured, Business & Economics, brexit, Theresa May, EU referendum, Michelle Cini, Nieves Pérez-Solórzano Borragán, Article 50, European Single Market, Bilateral agreements, European Economic Area, European Free Trade Association, European Union Politics, Léo Wilkinson, the norway model, UK Referendum

The UK’s vote to leave the EU has resulted in a tremendous amount of uncertainty regarding the UK’s future relationship with the EU. Yet predicting what type of new relationship the UK will have with the EU and its 27 other member states post-‘Brexit’ is very difficult, mainly because this is the first time an EU member state has prepared to leave. We can expect either one, or a mixture, of the following options.

The post Brexit: the UK’s different options appeared first on OUPblog.

Blog: OUPblog

JacketFlap tags: David Haven Blake, Books, History, Politics, cake, recipe, America, cake recipe, *Featured, re-election campaign, Arts & Humanities, Liking Ike, Eisenhower birthday, Eisenhower re-election, Ike Day, Ike Day activities, Mamie Eisenhower, Mrs. Eisenhower, President Dwight D. Eisenhower, President Eisenhower

On this day, sixty years ago, Republicans celebrated President Dwight D. Eisenhower’s upcoming birthday with a star-studded televised tribute on CBS. As part of his re-election campaign, Ike Day was a nationwide celebration of Ike: communities held dinners and parades, there were special halftime shows at college football games, and volunteers collected thousands of signatures from citizens pledging to vote.

The post Cake recipe from Ike Day celebrations appeared first on OUPblog.

Blog: OUPblog

JacketFlap tags: Books, Politics, interest groups, government policy, Social Sciences, *Featured, decision making, Business & Economics, policymakers, Transatlantic Trade and Investment Partnership, labour unions, Democratic politics, Andreas Dür, business associations, citizen groups, Insiders versus Outsiders, Interest Group Politics in Multilevel Europe, political systems, professional associations, The Confederation of British Industry

Virtually no government policy gets enacted without some organized societal interests trying to shape the outcome. In fact, interest groups – a term that encompasses such diverse actors as business associations, labour unions, professional associations, and citizen groups that defend broad interests such as environmental protection or development aid – are active at each stage of the policy cycle.

The post 5 things you always wanted to know about interest groups appeared first on OUPblog.

Blog: OUPblog

JacketFlap tags: Law, UK, Journals, politics, environment, pokemon, EU, *Featured, international law, UK law, Duncan French, JEL, Journal of Environmental Law, brexit, EU referendum, Pokemon Go

I may not have understood the allure of capturing Pokémon (...) but I hope I am not so trenchant as to run around in the hope of spotting something even rarer; UK membership of the EU as it existed prior to 23 June 2016. That truly is becoming an alternate reality.

The post Alternate realities: Brexit and Pokémon appeared first on OUPblog.

Blog: OUPblog

JacketFlap tags: Politics, Hilary clinton, Winston Churchill, leadership, Dwight Eisenhower, Donald Trump, Tony Abbott, Oxford Reference, Margaret Thatcher, US Presidents, Social Sciences, President Kennedy, *Featured, Online products, Quizzes & Polls, British Prime Ministers, world leaders, 2016 Presidential Campaign, Oxford Essential Quotations, Twiplomacy

In today’s globalised and instantly shareable social-media world, heads of state have to watch what they say just as much as – and perhaps even more than – what they actually do. The rise of ‘Twiplomacy’ and the recent war of sound bites between Donald Trump and Hillary Clinton speak to this ever-increasing trend. With these witty refrains in mind, test your knowledge of world leaders and their retorts – do you know who said what?

The post How well do you know your world leaders? [quiz] appeared first on OUPblog.

Blog: OUPblog

JacketFlap tags: History, Politics, Language, Social Networking, social media, America, oxford english dictionary, enlightenment, twitter, Tweets, english language, *Featured, presidential candidates, american politics, Online products, digital technology, public officials, The Independent Reflector, William Livingston

A New Yorker once declared that “Twitter” had “struck Terror into a whole Hierarchy.” He had no computer, no cellphone, and no online social media following. He was not a presidential candidate, but he would go on to sign the Constitution of the United States. So who was he? And what did he mean by “Twitter”?

The post Twitter and the Enlightenment in early America appeared first on OUPblog.

Blog: E is for Erik

JacketFlap tags: politics, Harts Pass, GRRR!

It goes without saying what inspired this strip-- but I do feel pretty bad about ascribing any such attitude or characteristic to those of the animal persuasion...

Blog: OUPblog

JacketFlap tags: Social Sciences, *Featured, Business & Economics, world politics, British economy, brexit, Theresa May, EU referendum, Article 50, An Introduction to International Relations, The Globalization of World Politics, European Single Market, Matthew Watson, Books, Politics, Finance, europe, European Union, david cameron, international relations

David Cameron famously got precious little from his pre-referendum attempts to negotiate a special position for the UK in relation to existing EU treaty obligations. This was despite almost certainly having held many more cards back then than UK negotiators will do when Article 50 is eventually invoked. In particular, he was still able to threaten that he would lead the Out campaign if he did not get what he wanted, whereas now that the vote to leave has happened that argument has been entirely neutralised.

The post Brexit and Article 50 negotiations: why the smart money might be on no deal appeared first on OUPblog.

Blog: OUPblog

JacketFlap tags: Books, History, Religion, Politics, India, pakistan, middle east, Islam, Asia, taliban, Moses, osama bin laden, Muslin, jihad, islamic history, qur'an, *Featured, Pakistani Taliban, Dr. Mona Kanwal Sheikh, drone attacks, Faith Militant, jihadi, middle eastern history, muslim counrties, pakistan history

All simplistic hypotheses about “what drives terrorists” falter when there are suddenly human faces and complex life stories in front of you. The tragedy is that contemporary policies designed to handle, or rather crush, movements that employ terrorist tactics are prone to embrace a singular explanation of the terrorist motivation, disregarding the fact that people can be in the very same movement for various reasons.

The post The different faces of Taliban jihad in Pakistan appeared first on OUPblog.

Blog: OUPblog

JacketFlap tags: Books, Politics, Hillary Clinton, Republican party, Donald Trump, Social Sciences, *Featured, Business & Economics, Richard Grossman, US economy, Economic Policy with Richard S. Grossman, economic policy, economic policy with richard grossman, wrong: nine economic policy disasters and what we can learn from them, 2016 Presidential Campaign, Greg Mankiw, Martin Feldstein, White House Council of Economic Advisors

A few weeks ago, I received an e-mail inviting me to sign a statement drafted by a group calling itself “Economists Concerned by Hillary Clinton’s Economic Agenda.” The statement, a vaguely worded five-paragraph denunciation of Democratic policies (and proposed policies), is unremarkable — as are the authors, a collection of reliably conservative policy makers and commentators whose support for Donald Trump appears with some regularity in the media.

The post One concerned economist appeared first on OUPblog.
