
Viewing: Blog Posts Tagged with: computing, Most Recent at Top



By: Helena Palmer, on 4/15/2016

Blog: OUPblog


JacketFlap tags: IBM, computing, new technology, Social Sciences, US economy, information technology, Alex Sayf Cummings, Democracy of Sound, socioeconomics, colin clark, daniel bell, Information Revolution, knowledge-based economy, marshal mcluhan, Music Piracy and the Remaking of American Copyright in the Twentieth Century, Technology Industry, Books, America, *Featured, Business & Economics, marketing, Technology


The idea that the United States economy runs on information is so self-evident and commonly accepted today that it barely merits comment. There was an information revolution. America “stopped making stuff.” Computers changed everything. Everyone knows these things, because of an incessant stream of reinforcement from liberal intellectuals, corporate advertisers, and policymakers who take for granted that the US economy shifted toward a “knowledge-based” economy in the late twentieth century.

The post The invention of the information revolution appeared first on OUPblog.

By: Charley, on 1/23/2016

Blog: OUPblog


JacketFlap tags: Technology, Journals, Justin Richards, it, Mathematics, artificial intelligence, computer science, computing, ai, cyber security, *Featured, Science & Medicine, information technology, The Computer Journal, BCS, Steve Furber, The Chartered Institute for IT, University of Manchester


Oxford University Press is excited to welcome Professor Steve Furber as the new Editor-in-Chief of The Computer Journal. In an interview between Justin Richards of BCS, The Chartered Institute for IT, and Steve, we learn more about the SpiNNaker project, ethical issues around Artificial Intelligence (AI), and the future of the IT industry.

The post Conversations in computing: Q&A with Editor-in-Chief, Professor Steve Furber appeared first on OUPblog.

By: Meredith Sneddon, on 8/21/2014

Blog: OUPblog


JacketFlap tags: computing, cognitive psychology, *Featured, Science & Medicine, Psychology & Neuroscience, visual processing, Experimental vision, Li Zhaoping, Understanding vision, Books, Data, vision, Theory, models


About half a century ago, an MIT professor set up a summer project for students to write a computer programme that could “see”, or interpret, objects in photographs. Why not! After all, seeing must be some smart manipulation of image data that can be implemented in an algorithm, and so should be good practice for smart students. Decades have passed, and we still have not fully achieved the aim of that summer student project, though a worldwide computer vision community has been born.

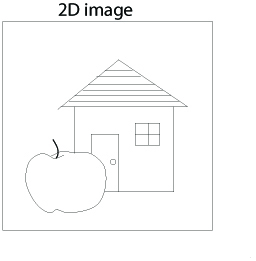

We think of being “smart” as including the intellectual ability to do advanced mathematics, complex computer programming, and similar feats. It was shocking to realise that this is often insufficient for recognising objects such as those in the following image.

Image credit: Fig 5.51 from Li Zhaoping, Understanding Vision: Theory, Models, and Data

Can you write computer code to “see” the apple from the black-and-white pixel values? A pre-school child could of course see the apple easily with her brain (using her eyes as cameras), despite lacking advanced maths or programming skills. It turns out that one of the most difficult issues is a chicken-and-egg problem: to see the apple it helps to first pick out the image pixels for this apple, and to pick out these pixels it helps to see the apple first.

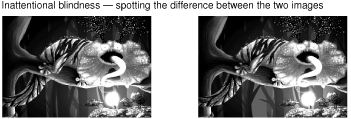

A more recent shocking discovery about vision in our brain is that we are blind to almost everything in front of us. “What? I see things crystal-clearly in front of my eyes!” you may protest. However, can you quickly tell the difference between the following two images?

Image credit: Alyssa Dayan, 2013. Fig. 1.6 from Li Zhaoping, Understanding Vision: Theory, Models, and Data. Used with permission.

It takes most people several seconds or more to see the (big) difference – but why so long? Our brain gives us the impression that we “have seen everything clearly”, and this impression is consistent with our ignorance of what we do not see. This makes us blind to our own blindness! How we survive in our world given our near-blindness is a long, and as yet incomplete, story, with a cast including powerful mechanisms of attention.

Being “smart” also includes the ability to use our conscious brain to reason and make logical deductions, using familiar rules and past experience. But what if most brain mechanisms for vision are subconscious and do not follow the rules or conform to the experience known to the conscious parts of our brain? Indeed, in humans, most of the brain areas responsible for visual processing are among the furthest from the frontal brain areas most responsible for our conscious thoughts and reasoning. No wonder the two examples above are so counter-intuitive! This explains why even such obvious near-blindness was discovered only a decade ago, despite centuries of scientific investigation of vision.

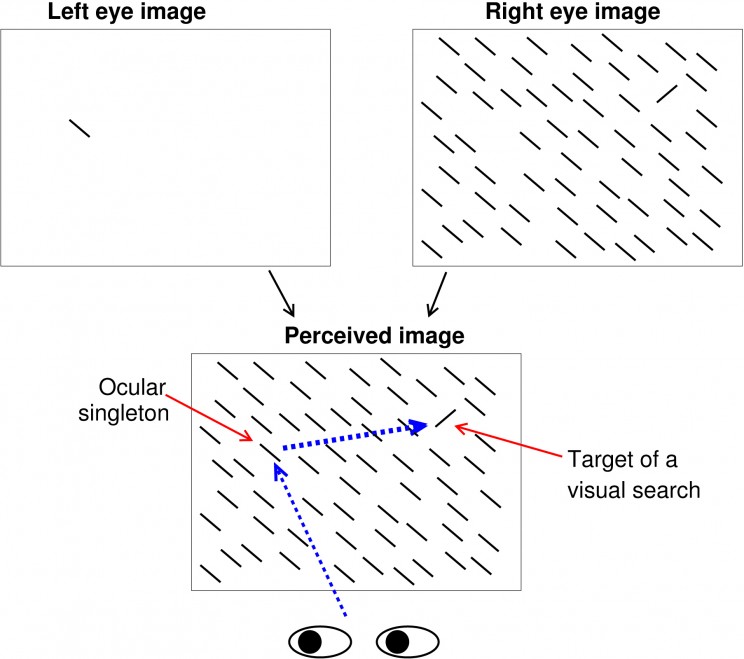

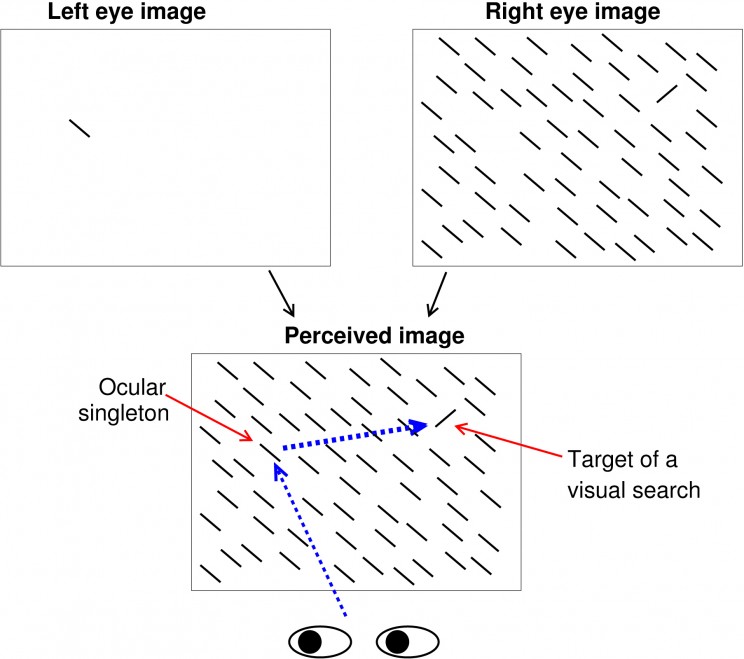

Another counter-intuitive finding, discovered only six years ago, is that our attention or gaze can be attracted by something we are blind to. In our experience, only objects that appear highly distinctive from their surroundings attract our gaze automatically. For example, a lone red flower in a field of green leaves does so, unless we are colour-blind. Our impression that gaze capture occurs only for highly distinctive features turns out to be wrong. In the following figure, a viewer perceives an image that is a superposition of two images, one shown to each eye using the equivalent of the spectacles used for watching 3D movies.

Image credit: Fig 5.9 from Li Zhaoping, Understanding Vision: Theory, Models, and Data

To the viewer, it is as if the perceived image (containing only the bars but not the arrows) is shown simultaneously to both eyes. The uniquely tilted bar appears most distinctive from the background. In contrast, the ocular singleton appears identical to all the other background bars; that is, we are blind to its distinctiveness. Nevertheless, the ocular singleton often attracts attention more strongly than the orientation singleton (so that the first gaze shift is more frequently directed to the ocular rather than the orientation singleton), even when the viewer is told to find the latter as soon as possible and ignore all distractions. It is as if the ocular singleton were uniquely coloured and distracting, like the lone red flower in a green field, except that we are “colour-blind” to it. Many vision scientists find this hard to believe without experiencing it themselves.

Are these counter-intuitive visual phenomena too alien to our “smart”, intuitive, and conscious brain to comprehend? In studying vision, are we like Earthlings trying to comprehend Martians? Landing on Mars rather than glimpsing it from afar can help the Earthlings. However, are the conscious parts of our brain too “smart” and too partial to “dumb” down suitably to the less conscious parts of our brain? Are we ill-equipped to understand vision because we are such “smart” visual animals possessing too many conscious preconceptions about vision? (At least we will be impartial in studying, say, electric sensing in electric fish.) Being aware of our difficulties is the first step to overcoming them – then we can truly be smart rather than smarting at our incompetence.

Headline image credit: Beautiful woman eye with long eyelashes. © RyanKing999 via iStockphoto.

The post Are we too “smart” to understand how we see? appeared first on OUPblog.

By: Alice, on 11/29/2012

Blog: OUPblog


JacketFlap tags: great mind, Jack Copeland, mathematician, the turing, Editor's Picks, *Featured, Science & Medicine, UKpophistory, B. Jack Copeland, code-breaker, World War II, enigma, turing, History, Biography, UK, computer, Mathematics, alan turing, computing


By Jack Copeland

Three words to sum up Alan Turing? Humour. He had an impish, irreverent and infectious sense of humour. Courage. Isolation. He loved to work alone. Reading his scientific papers, it is almost as though the rest of the world — the busy community of human minds working away on the same or related problems — simply did not exist. Turing was determined to do it his way. Three more words? A patriot. Unconventional — he was uncompromisingly unconventional, and he didn’t much care what other people thought about his unusual methods. A genius. Turing’s brilliant mind was sparsely furnished, though. He was a Spartan in all things, inner and outer, and had no time for pleasing décor, soft furnishings, superfluous embellishment, or unnecessary words. To him what mattered was the truth. Everything else was mere froth. He succeeded where a better furnished, wordier, more ornate mind might have failed. Alan Turing changed the world.

What would it have been like to meet him? Turing was tallish (5 feet 10 inches) and broadly built. He looked strong and fit. You might have mistaken his age, as he always seemed younger than he was. He was good looking, but strange. If you came across him at a party you would notice him all right. In fact you might turn round and say “Who on earth is that?” It wasn’t just his shabby clothes or dirty fingernails. It was the whole package. Part of it was the unusual noise he made. This has often been described as a stammer, but it wasn’t. It was his way of preventing people from interrupting him, while he thought out what he was trying to say. Ah – Ah – Ah – Ah – Ah. He did it loudly.

If you crossed the room to talk to him, you’d probably find him gauche and rather reserved. He was decidedly lah-di-dah, but the reserve wasn’t standoffishness. He was a man of few words, shy. Polite small talk did not come easily to him. He might, if you were lucky, smile engagingly, his blue eyes twinkling, and come out with something quirky that would make you laugh. If conversation developed you’d probably find him vivid and funny. He might ask you, in his rather high-pitched voice, whether you thought a computer could ever enjoy strawberries and cream, or could make you fall in love with it. Or he might ask if you could say why a face is reversed left to right in a mirror but not top to bottom.

Once you got to know him Turing was fun — cheerful, lively, stimulating, comic, brimming with boyish enthusiasm. His raucous crow-like laugh pealed out boisterously. But he was also a loner. “Turing was always by himself,” said codebreaker Jerry Roberts: “He didn’t seem to talk to people a lot, although with his own circle he was sociable enough.” Like everyone else Turing craved affection and company, but he never seemed to quite fit in anywhere. He was bothered by his own social strangeness — although, like his hair, it was a force of nature he could do little about. Occasionally he could be very rude. If he thought that someone wasn’t listening to him with sufficient attention he would simply walk away. Turing was the sort of man who, usually unintentionally, ruffled people’s feathers — especially pompous people, people in authority, and scientific poseurs. He was moody too. His assistant at the National Physical Laboratory, Jim Wilkinson, recalled with amusement that there were days when it was best just to keep out of Turing’s way. Beneath the cranky, craggy, irreverent exterior there was an unworldly innocence though, as well as sensitivity and modesty.

Turing died at the age of only 41. His ideas lived on, however, and at the turn of the millennium Time magazine listed him among the twentieth century’s 100 greatest minds, alongside the Wright brothers, Albert Einstein, DNA busters Crick and Watson, and the discoverer of penicillin, Alexander Fleming. Turing’s achievements during his short life were legion. Best known as the man who broke some of Germany’s most secret codes during the war of 1939-45, Turing was also the father of the modern computer. Today, all who click, tap or touch to open are familiar with the impact of his ideas. To Turing we owe the brilliant innovation of storing applications, and all the other programs necessary for computers to do our bidding, inside the computer’s memory, ready to be opened when we wish. We take for granted that we use the same slab of hardware to shop, manage our finances, type our memoirs, play our favourite music and videos, and send instant messages across the street or around the world. Like many great ideas this one now seems as obvious as the wheel and the arch, but with this single invention — the stored-program universal computer — Turing changed the way we live. His universal machine caught on like wildfire; today personal computer sales hover around the million a day mark. In less than four decades, Turing’s ideas transported us from an era where ‘computer’ was the term for a human clerk who did the sums in the back office of an insurance company or science lab, into a world where many young people have never known life without the Internet.
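To make the stored-program idea a little more concrete, here is a toy sketch in Python. It assumes a made-up accumulator machine: the opcodes `LOAD`, `ADD`, `STORE` and `HALT`, and the encoding `opcode * 100 + address`, are hypothetical and are not taken from Turing's own designs. The point it illustrates is only that instructions are plain numbers kept in the same memory as the data, and one fixed fetch-decode-execute loop runs whatever program has been loaded.

```python
from typing import List

# Hypothetical toy instruction set, for illustration only (not Turing's ACE or any real machine).
# Each instruction is stored as a plain number: opcode * 100 + address.
HALT, LOAD, ADD, STORE = 0, 1, 2, 3


def run(memory: List[int]) -> List[int]:
    """Run the program held in `memory`, starting at address 0.

    Program and data share the same list, which is the essence of the
    stored-program idea. The list is modified in place and returned.
    """
    acc = 0  # accumulator register
    pc = 0   # program counter: address of the next instruction
    while True:
        opcode, addr = divmod(memory[pc], 100)  # fetch and decode
        pc += 1
        if opcode == HALT:
            return memory
        elif opcode == LOAD:
            acc = memory[addr]
        elif opcode == ADD:
            acc += memory[addr]
        elif opcode == STORE:
            memory[addr] = acc
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 1}")


# Addresses 0-3 hold the program; addresses 10-12 hold data.
# This program computes memory[12] = memory[10] + memory[11].
adder = [LOAD * 100 + 10, ADD * 100 + 11, STORE * 100 + 12, HALT * 100]
memory = adder + [0] * 6 + [2, 3, 0]
result = run(memory)
print(result[12])  # prints 5
```

Loading a different list of numbers into memory turns the same `run` loop into a different program, with no change to the "hardware", which mirrors the paragraph's point about using one slab of hardware for shopping, finances, music and messaging.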

B. Jack Copeland is the Director of the Turing Archive for the History of Computing, and author of Turing: Pioneer of the Information Age, Alan Turing’s Electronic Brain, and Colossus. He is the editor of The Essential Turing. Read the new revelations about Turing’s death after Copeland’s investigation into the inquest.

Visit the Turing hub on the Oxford University Press UK website for the latest news in the Centenary year. Read our previous posts on Alan Turing, including: “Maurice Wilkes on Alan Turing” by Peter J. Bentley, “Turing: the irruption of Materialism into thought” by Paul Cockshott, “Alan Turing’s Cryptographic Legacy” by Keith M. Martin, “Turing’s Grand Unification” by Cristopher Moore and Stephan Mertens, “Computers as authors and the Turing Test” by Kees van Deemter, and “Alan Turing, Code-Breaker” by Jack Copeland.

For more information about Turing’s codebreaking work, and to view digital facsimiles of declassified wartime ‘Ultra’ documents, visit The Turing Archive for the History of Computing. There is also an extensive photo gallery of Turing and his war at www.the-turing-web-book.com.


By: Nicola, on 11/25/2011

Blog: OUPblog


JacketFlap tags: Technology, Social Networking, VSI, mobile phone, tablet, desktop, cloud computing, computer science, smartphone, computing, A Very Short Introduction, *Featured, Science & Medicine, darrel ince, zittrain


By Darrel Ince

I’m typing this blog entry on a desktop computer. It’s two years old, but I’m already looking at it and my laptop wondering how long they will be around in their current form. There are three fast-moving trends that may change computing over the next five years, affect the way that we use computers, and perhaps make desktop and laptop computers the computing equivalent of the now almost defunct record player.

The first trend is that the computer and the mobile phone are converging. If you use one of the new generation of smartphones, an iPhone for example, you are able not only to send and receive phone calls but also to carry out computer-related tasks such as reading email and browsing the web. This convergence has also embraced a new generation of computers known as tablet computers. These are light and thin, contain a relatively small amount of memory and, again, implement many of the facilities that are on my desktop and laptop computers.

The second trend is that the use of the computer is changing. New generations of users are accessing web sites such as Facebook, Twitter and Digg. These social networking sites have become either a substitute for, or an add-on to, normal interaction. Moreover, recent figures indicate that there has been a major shift in the use of email facilities from the home computer to the smartphone and tablet computer.

The third trend is that data and software are moving from the computer on the desk or on the lap to the Internet. A commercial example is the company Salesforce.com. This is a successful company whose main business is customer relationship management: the process of keeping in touch with a customer; for example, tracking their orders and ensuring that they are happy with the service they are receiving. Salesforce.com keep much of their data and software on a number of Internet-based servers and their customers use the web to run their business. In the past, customer relationship management systems had to be bought as software, installed on a local computer, and then maintained by the buyer. This new model of doing business (something known as cloud computing) overturns this idea.

The third trend, cloud computing, is also infiltrating the home use of computing. Google Inc. has implemented a series of office products such as a word processor, a calendar program and a spreadsheet program that can only be accessed over the Internet, with documents stored remotely, not on the computer that accesses them.

So, the future looks to be configured around users employing smartphones and tablets to access the Internet for all their needs, with desktop and laptop computers confined to specialist areas such as systems development, film editing, games programming and financial number crunching. Technically, there are few obstacles in the way of this: the cost of computer circuits drops every year, and the inexorable increase in broadband speeds and advances in silicon technology mean that more and more electronics can be packed into smaller and smaller spaces.

There is, however, a major issue that has been explored by three writers: Nicholas Carr, Tim Wu and Jonathan Zittrain. Carr, in his book The Big Switch, uses a series of elegant analogies to show that computing is heading towards becoming a utility. The book first provides a history of the electrical generation industry where, in the early days, companies had their own generators; however, eventually, due to the efforts of Thomas Edison and Samuel Insull, power became centralised, with utility companies delivering electricity to consumers over a grid. The book then describes how this is happening with the Internet. It describes the birth of cloud computing, where all software and data are stored on the Internet and where the computer could be downgraded to a simple consumer device with little if any storage and only the ability to access the World Wide Web.

Zittrain, in his book The Future of the Internet and How to Stop It

People at drop-in time who are just learning to use email have lately been asking me whether I know what “the cloud” is. I assume the NY Times wrote something about it. I know it well enough to explain it to someone who also doesn’t know what Bcc is, but I wasn’t sure I understood it well enough to talk to other librarians about it. Here is a good First Monday article that spells out a lot of it: Where is the cloud? Geography, economics, environment, and jurisdiction in cloud computing. There is some more discussion about how this affects libraries in the latest Library 2.0 Gang podcast. [thanks justin!]

Can it be defined in one sentence? Maybe three sentences?

… Cloud?

Nothing is impossible, even defining a noun or a verb in one or three sentences.

I came across such a question when someone was asking, “WHAT IF I HAD TEN MILLION DOLLARS WITH ME, WHAT WOULD I DO WITH IT?”

This type of question is similar to questions like “WHO IS GOD, PLEASE.”

However, nowadays people prefer instant, personal answers that they can do battle with, as in the MSN Sports BATTLES.

We are definitely now in the open arena, a challenging era, in pursuit of better than the best. Doubtless it is heading towards a crisis or challenge that will lead to another chaotic state.

And obviously it will be fast and furious, but do enjoy the trip when it is well managed or organized.

In one sentence: “The simplest proper answer would always be … SEEK in the proper dictionary.”

-ray.

[...] to Jessamyn West from librarian.net for pointing out Where is the cloud? Geography, economics, environment, and [...]