Viewing: Blog Posts Tagged with: Computer Graphics, Most Recent at Top

"Symphony of Two Minds" is a short film about CG animation finding its own style amid a variety of influences. (Link to YouTube)

It begins with two cartoon characters eating a meal in an aristocratic dining parlor. They remark on how sophisticated their world is. It is visually sumptuous indeed, with hand-held photographic camera work and richly rendered textures.

But the low-class young man hasn't fully elevated himself from his origins in a hyper 2D anime universe, and he keeps experiencing flashbacks to it.

Director Valere Amirault says: "How do we choose to mix influences when dealing with a medium as new as CG animation? From live action independent movies to Japanese anime, CG animation is still a new form of media trying to find its own style, to differentiate itself from traditional cartoons."

-----

Via Cartoon Brew

Where are we headed with augmented reality? This short film by Keiichi Matsuda presents an unsettling vision of a possible future. The film superimposes digital animations over a mundane live action video showing a person's point of view as they ride a bus and shop for food. (Link to Vimeo)

Apps address us as personal assistants. Rewards and bonuses tally up like in a video game. Ads and offers leap out from products. Guidelines appear on sidewalks. The person interacts with this hybrid reality by using voice and hand gestures.

At the website Hyper Reality, Mr. Matsuda says: "Our physical and virtual realities are becoming increasingly intertwined. Technologies such as VR, augmented reality, wearables, and the internet of things are pointing to a world where technology will envelop every aspect of our lives. It will be the glue between every interaction and experience, offering amazing possibilities, while also controlling the way we understand the world. Hyper-Reality attempts to explore this exciting but dangerous trajectory. It was crowdfunded, and shot on location in Medellín, Colombia."

----

Via Cartoon Brew

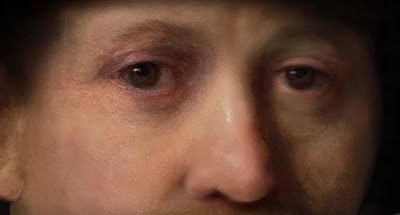

A team of scientists used statistical analysis, deep learning algorithms, and 3D printers to create an image that is intended to look like a typical Rembrandt.

Here's how they did it (Link to YouTube)

Read about the process on The Guardian.

Thanks, Dan

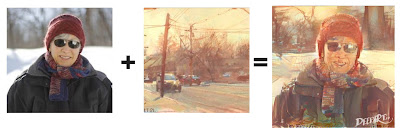

Can a computer learn to paint with your style?

DeepArt is a free online computer algorithm that claims to transform your photos into painterly images, using your own painting style to guide the computer.

I thought I'd try it out by uploading a photo (left) and a closely related plein-air oil painting (right) to see what the algorithm comes up with.

After waiting about 10 hours, I got an email saying my image was ready:

The result is pretty disappointing, with strange dark slashes in the sky that weren't in either the photo or the painting. The rest of the building looks really crudely painted.

I tried it once more with a photo and a painting that were really different.

The output uses the color scheme of the painting, and grafts paint-like textures to match the relative values. Beyond that it didn't make any choices that I would regard as artistic. Although the result is marginally more interesting than a Photoshop filter, it doesn't look like a painting. The algorithm doesn't do well with faces, which require a particular attention to the eyes and mouth.

Despite the shortcomings of this algorithm, it's easy to imagine the power of future software that not only patches together stylistic fingerprints, but also uses strategies of machine perception and image parsing.

----

Related posts:

By: KatherineS, on 11/13/2015

Blog: OUPblog

JacketFlap tags: tv, science, transport, VSI, physics, synthetic, peter atkins, vegetation, VSI online, syllabus, nature, Medical Mondays, biology, chemistry, agriculture, energy, entropy, Very Short Introductions, computer graphics, atom, chemical reactions, A Very Short Introduction, *Featured, Physics & Chemistry, molecule, Science & Medicine, Chemistry Week, Chemistry Week 2015

What is all around us, terrifies a lot of people, but adds enormously to the quality of life? Answer: chemistry. Almost everything that happens in the world, in transport, throughout agriculture and industry, to the flexing of a muscle and the framing of a thought involves chemical reactions in which one substance changes into another.

The post The case for chemistry appeared first on OUPblog.

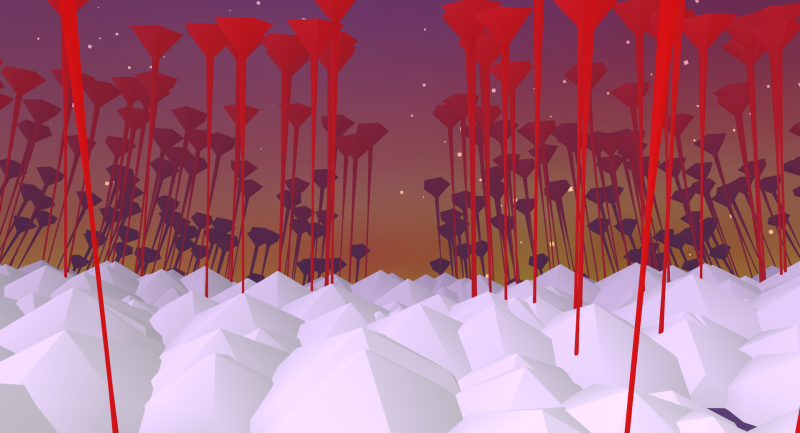

Panoramical is a music visualizer that creates moving images tied to a music track. Here's the trailer.

But it's also a game that lets users customize various parameters of experience, resulting in something that resembles electronic lucid dreaming, or interactive hallucinogenic synesthesia.

It was created by Argentine DJ, visualist, and programmer Fernando Ramallo and Proteus co-creator David Kanaga.

Via BoingBoing

There's something mesmerizing about watching little dragons made of semi-viscous cookie batter falling helplessly into heaps and melting into each other. (Link to YouTube)

At CTN Animation Expo I bought a copy of Robh Ruppel's new art book Graphic L.A., and want to share it with you.

Robh is one of those rare artists whose work spans imaginative and observational painting. He has worked as a designer for video games and films, and has taught at Art Center. He has also been a leader in digital plein-air painting.

While the book contains some landscapes, the bulk of the images are urban scenes. What I like most about his work is his ability to find beauty in commonplace scenes.

The book includes a mix of finished paintings, thumbnail sketches and step-by-step sequences. The sketches are in tone, most often in marker, while the colored finished paintings appear to be all digital.

The sense of color and light in many of the paintings is extremely evocative.

Accompanying the images are helpful chunks of advice, such as "Reduce, refine, interpret."

Before he commences a painting, he always explores the possibilities of the subject in two or three tones. "Good value design," he says, "is the clear simple arrangement of a few tones."

He says, "Searching out the composition should take as long as rendering the image. Ultimately, the staging is what tells the story."

The book is 144 pages, about 8x8 inches.

The new animated film Big Hero 6 uses a new rendering system developed at Disney Animation Studios that simulates the effect of light on surfaces with much more subtlety and nuance than in previous CGI animated films.

The rendering system, called Hyperion, manages the huge computational volume required for ray tracing. In a ray-traced image, the graphics system tracks the behavior of light rays that interact with various kinds of surfaces before passing through the picture plane.

Any given light ray may bounce as many as 10 times, creating all sorts of secondary shadows, reflected light, or subsurface scattering. The inflatable robot character called Baymax is a perfect proof-of-concept for the rendering system because of all the internal scattering inside the vinyl skin.

Although the designers could have used this system for a photo-real image, they were very conscious of keeping to the stylized character of the animated world.

The film is set in an alternate universe of "San Fransokyo." It not only had to combine design elements of east and west, but also had to be extremely detailed and layered to allow for some fly-through sequences.

The geometry was connected to an actual street grid of San Francisco, and the assets can be reused for future films and games.

Both the rendering software and the architectural generator put immense demands on the Disney supercomputers. Tech supervisor Andy Hendrickson said "This movie is more computationally complex than our last three movies combined."

In this video, Norm from Tested interviews Mr. Hendrickson about the techniques and challenges. (Link to video)

Book: The Art of Big Hero 6

Ray tracing on Wikipedia

All images ©Disney 2014

Speaking of animation, I'll be a speaker at CTN Animation Expo at Burbank in less than two weeks, giving presentations about Color and Light and Imaginative Realism. Hope to meet you there.

Armand Baltazar, an artist and senior designer at Pixar, just inked a deal with HarperCollins for a three book illustrated series called "Timeless."

According to the Hollywood Reporter, the trilogy is "in the vein of Avatar and Harry Potter. The story centers on a boy — joined by a motley gang of friends — who is seeking to rescue his father from a Roman general after a 'time collision' has thrown together the past, present and future."

I asked Armand to share some insights into the inspiration, tools, and process behind his illustrations.

He says: "I would describe the look and feel of Timeless as an homage to my heroes from the golden age of illustration: NC Wyeth, Dean Cornwell, Norman Rockwell, Joseph Clement Coll to name a few, with classic influences that range from Ilya Repin, Anders Zorn, to Mariano Fortuny."

"I started out wanting to paint everything in oil-paint, but having to hold down a demanding full-time studio job made painting late nights and weekends too demanding and impractical. So now the majority of the work for the book is a blend of traditional and digital media."

"I begin with thumbnails, sometimes working all the way through to refined drawings or value comps. But often I'll resolve lighting design in my color keys. I will either scan the thumbnails and drawings into the computer or rough in a watercolor and gouache pass across the top and then scan that in."

"I paint the paintings digitally using Photoshop and Painter. But I use these digital tools in a way that emulates watercolor, oil painting and traditional animation background painting. Using my drawing and color key as a guide, I paint from background to foreground."

"I often start blocking in the big graphic shapes of my composition, then my light and dark patterns leaving the shadows as thin simple statements and work towards opacity in the lights. The Flying car painting was painted more like a traditional watercolor saving my lights and working towards darker saturated values and color."

"I work on the painting in the computer using 2-3 simple ugly brushes that give me the tactile quality I'm looking for. I keep the painting flattened and overpaint and cut back over the shapes as I would in oil leaving the history of the brush decisions when possible."

"I especially love doing that when painting characters. Trying to get something that is a mix of Rockwell (painterly) and Frank Duveneck with nods towards impressionist color and light when the scene or lighting design calls for it."

Thanks for the insights, Armand, and best wishes on the project.

----

Before landing his job at Pixar, Armand worked at ImageMovers Digital, Walt Disney Feature Animation, and DreamWorks SKG. He has worked on The Prince of Egypt, The Road to El Dorado, Spirit: Stallion of the Cimarron, Sinbad, Shark Tale, Flushed Away, Bee Movie, The Princess and the Frog, A Christmas Carol, and Cars 2.

Armand Baltazar's website

(Direct link to YouTube video)

How does your body move when you breathe? Well, of course the rib cage expands and contracts, but surprisingly the movement is more up and down than it is in and out, says Michael Black, co-author of a computer graphics study presented at the recent Siggraph.

There are many more small but observable movements going on. The arms push out, the head goes back and forth, and the spine flexes. The movements are slightly different for "stomach breathing" compared to "chest breathing," something that singers are very conscious of. One possible flaw in the methodology of this study is that subjects were asked to "breathe normally," a sure way to make them breathe unnaturally.

Once you learn to recognize the subtle body changes that accompany breathing, you won't look at a posed model the same way, and you'll notice actors in movies controlling their breathing as part of their performance.

Animators will be able to input this breathing data with simple controls, including a spirometer, which records breathing volume. In the future, when actors record their voice parts for CG animated films, they'll be able to record their breath acting as well. That information will yield a more believable and lifelike performance, whether the character they're playing is a realistic human or a talking turnip.

The authors are: Tsoli, A., Mahmood, N. and Black, M.J.

The paper is entitled: "Breathing Life into Shape: Capturing, Modeling and Animating 3D Human Breathing"

Here's a short 3D CGI film called "Navigation in Dreamtime."

The camera drifts through trippy dreamscapes, as ornate golden orbs and tendrils gently morph in shape, pattern, and color.

(Direct link to video) The animation is by Julius Horsthuis, who imagines that "It's 1596 AD and a ship is frozen in the deep arctic. No help is coming. The Navigators and Cartographers aboard, suffering from hypothermia and frostbite are starting to hallucinate."

Fractal-powered software is Mandelbulb 3D. Music is "Soul Medicine" by Mari Boine.

If you liked this, check out his "Phenotypic Sarcophagi."

Via CGBros.

Zsolt Ekho Farkas created this 3D interpretation of a painting by Hungarian painter Benczúr Gyula (1844-1920).

The painting depicts the recapture of the Buda Palace in 1686 from the Ottoman army. It took a month for Mr. Farkas to model all 32 figures, plus another five weeks to digitally paint and prepare the masks and layers. With the smoke and music, the moving-camera parallax, and the focus pulls, the scene jumps right off the canvas.

In big-budget live action movies, an increasingly important part of the CGI pipeline is a step called "postvisualization," which means a series of shots with low-res digital effects created after principal photography.

(Direct link to video) This documentary explains the advantages that postvis gives the director and editor to allow them to make good storytelling decisions and bid the effects accurately before the expensive rendering of the final shots.

----

Via CGBros

Blog reader Aaron Becker created the bestselling picture book Journey.

He told me that he builds digital maquettes before doing his finished illustrations in traditional watercolors.

I invited him to do a guest post explaining the process, so here it is:

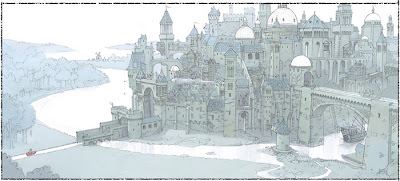

"Journey began as this large scale drawing, completed over the course of two afternoons three summers ago."

"Once the story had been roughed out, I returned to the original drawing and built this 3D model in Maya as a proof of concept – to see if I could replicate the spirit of the drawing as a detailed digital model. I would use this to help with perspective and lighting, and went on to build similar models for other environments in the book."

"At this stage, I’ve added significant detail to the 3D model with a new scanned pencil drawing. This light overlay gets printed out onto 300 LB Arches watercolor paper using my Epson large format printer."

"This allows me to dive into the watercolors with the confidence that my composition and perspective is spot-on. I prefer the heavy stock for watercolor as it minimizes warping and allows for fantastic control over the amount of moisture while applying pigment."

"From there, it’s a matter of old-school pen and ink and watercolor. I do the pen and ink first, making sure to give myself the freedom to change anything from the pencil underlay should the spirit move me. That said, I find the more I’ve decided conceptually ahead of time, the more I can concentrate on pure technique at any stage of the image-making game."

"Watercolor is applied in several washes from light to dark and then finished up with shadow details. I try to minimize the use of any masking fluid as I find this changes the quality of the paper for subsequent washes. Gouache was used for all of the punchy red and purple bits throughout the story."

"When it came time to create the book’s video trailer (see below), I went back into the 3D model and camera-mapped the watercolor painting onto the geometry, like a virtual slide projector covering a foam-core model. After separating the painting into several layers (background, towers, castle walls, etc) I was able to move a virtual camera in Maya and bring the image to life."

"Some details, like the waving flags were hand animated and projected onto transparent “cards” in Maya. This entire technique is similar to how matte paintings are now done in feature films. Having come from a film background, I wanted to utilize as much of the tricks I had learned as possible, while retaining the hand-made feel of the watercolor paintings. In the end, there are no shortcuts when it comes to dipping a brush into pigment and placing it on the page. Hope you all enjoy the finished product!"

JOURNEY by Aaron Becker (official trailer) from Aaron Becker on Vimeo.

Here's the book trailer Aaron created.

You can pick up the book online at:

In this remarkable demonstration, Daniel Cohen-Or and colleagues demonstrate a method of digitally inferring a three-dimensional solid from a 2D photo. (Direct link to video). This could be a big timesaver for digital modelers and matte painters.

--------

Thanks, Rob Nonstop

Here's some mesmerizing digital animation of pulsing, fluid-like forms timed to music, by Matthias Müller. (Video link)

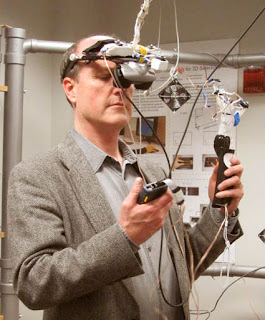

On Thursday I visited the Worcester Polytechnic Institute (WPI) in Massachusetts as a guest of the department of Interactive Media and Game Development (IMGD), where I gave a lecture on Worldbuilding.

The IMGD program at WPI is designed to provide students with both programming expertise and art knowledge so that they're well rounded in their approach to interactive design.

One of the professors is Britt Snyder (left, with a Jordu Schell sculpt between us). Britt has worked as an artist in the field of video game development for the past 13 years, with clients like SONY, Blizzard, Liquid Entertainment, Rockstar, THQ, and many others.

He teaches 3D modeling, digital painting, and concept art.

WPI was one of the first to develop a program in game design, and is one of the top-ranked academic programs in the field. Since the department is part of a larger engineering school, there's always a focus on blending art and technology, with an eye on fostering close working relationships between artists and programmers.

Students get to jump right in and participate in hands-on projects and collaborations, creating games, virtual environments, interactive fiction, art installations, collaborative performances. They are encouraged to invent entirely new forms of media.

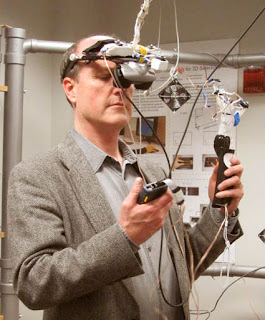

I was thrilled to be invited by PhD candidate Jia Wang to try out the virtual reality mo-cap lab, dubbed "Phase Space."

I am wearing a stereoscopic head-mounted display and holding a tracking constellation (basically a souped up Wii controller with very precise tracking points).

The myriad sensors mounted on the outer frame follow the exact 3D movements of my head and hand-held wand, turning me into a St. George with a sword facing off against a dragon, or whatever.

Small fans mounted on the outer frame can generate the effect of wind, so that the player can feel completely immersed in a virtual environment.

------

(Direct link to video) One of the reasons I'm glad to be a traditional painter in a digital age is that there's so much to learn from my fellow artists who are on the cutting edge of computer graphics.

In this video, Epic's senior technical artist Alan Willard takes us on a walk-through demo of Unreal Engine 4, a digital toolset used by a lot of game developers. The game engine offers designers the ability to modify parameters and see how they look in real time.

Even if you don't use such tools or play such games, there's a lot to learn from the increasingly sophisticated vocabulary and way of thinking about the effects of light and smoke and surfaces and particles in the 3D game world.

(Link to YouTube video) Slit scan video transforms everyday scenes by elongating, compressing, and twisting elements into trippy dreamscapes. This one was made with an inexpensive Mac app.

Kamil Sladek explains how it works on Gizmodo:

You can make your own slit camera out of any video capable digital camera with a regular sensor and a regular lens. All you need to do is the following:

1. record a video of your action

2. extract each frame as an individual image (the opposite to what you would do for a time lapse)

3. extract a vertical single pixel wide line from each image (for example a line from the center)

4. stack those lines horizontally from left to right to form an actual "slit scan" image

This can be automated by tools like e.g. ImageMagick and the longer your initial video was, the wider your image will be. In fact, the width of your slit scan image will have exactly the same amount of pixels as your initial video's frame number.

Now, to go one step further you can proceed for all the other vertical lines of your images and create one slit scan image for each particular set of vertical lines. This will give you a set of as many slit scan images as your initial video was wide in pixels. Combining that set of slit scan images to a video (this time exactly as in a time lapse) your result can look like this.
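The steps quoted above can be sketched in a few lines of Python. This is only a minimal illustration, assuming the video has already been decoded into nested lists indexed as `frames[t][row][col]`; the function names `slit_scan` and `slit_scan_video` are hypothetical, and reading frames from a file (for example with ImageMagick, as the quote suggests) is left out.

```python
def slit_scan(frames, column):
    """Build one slit-scan image from decoded video frames.

    frames[t][row][col] is the pixel at (row, col) in frame t.
    The result is indexed [row][t]: the chosen one-pixel-wide column of
    every frame, stacked left to right in time order.
    """
    height = len(frames[0])
    return [[frame[row][column] for frame in frames] for row in range(height)]


def slit_scan_video(frames):
    """Build one slit-scan image per column; together they form a new video."""
    width = len(frames[0][0])
    return [slit_scan(frames, column) for column in range(width)]


# Synthetic 5-frame, 4x6 "video" whose pixels record (frame, row, col).
video = [[[(t, r, c) for c in range(6)] for r in range(4)] for t in range(5)]
center = slit_scan(video, column=3)
scans = slit_scan_video(video)
```

Each slit-scan image is as wide as the video is long in frames, and the new video has as many frames as the original was wide in pixels. With an array library the same operation amounts to swapping the time and width axes.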

Via BoingBoing

Thursday's post about the painting machine called Vangobot brought on a lively discussion about the similarities and differences between human painters and programmed machines.

As many of you observed in the comments, Vangobot executes physical paintings, but the results are only as good as the instructions it receives. As a result, it's easy to dismiss Vangobot as a kind of fancy inkjet printer.

Machines like Vangobot may develop the hand skills to manipulate the brushes and paints, but will they ever have artistic judgment? Is it possible for a computer to be programmed to see and interpret the world in the same way that an experienced painter does?

These are qualities of the "eye" or "mind" or even the "soul" more than the "hand." Blog reader M.P. invoked a quote from nineteenth century drawing instructor James Duffield Harding who characterized the great artist as having an instinct for "selection, arrangement, sentiment, and beauty" rather than just replicating reality.

Let's have a look at a photograph of two people in a parklike setting. How would an experienced painter transform this image?

Note the difference in this master painter's interpretation. The details in the faces are accurately drawn, but rougher brushes are used for the foliage. The drapery is painted efficiently with big slashing strokes. The sky is painted loosely with spots of light. The sidewalk tiles are in perspective, but they're just suggested with thin dashing strokes. The colors are warmed up and intensified.

For a computer to do this, it would have to understand what it was looking at, and have an instinct to do all these subjective interpretations. It would need to be able to handle a lot of brushes in a variety of ways depending on what forms it was painting.

Let's look at another example, a photo of a landscape scene composed of sky, trees, water, and rock.

The experienced painter uses a variety of paint handling depending on material. The water uses long horizontal strokes, the rocks are done with flat brushes, and the ducks are painted carefully with small brushes.

Note how different this is from an off-the-shelf "artistic paint daub filter" from Photoshop, which merely translates everything into blobby strokes indiscriminately across the whole image, without regard to the areas that are psychologically important, such as the faces.

Now what if I told you that the "master painter" of all of the examples is a computer?

The creators of the program are Kun Zeng, Mingtian Zhao, Caiming Xiong, Song-Chun Zhu from Lotus Hill Institute and University of California, Los Angeles. The goal for the software was to interpret photographs in painterly terms.

The process begins with "image parsing," where the scene is divided and grouped into various areas of unequal importance and of unequal character, such as foliage, branches, drapery, and faces. Each region of the painting has different meaning to a viewer and therefore requires a different paint handling. This visual meaning is known in the field of artificial intelligence as "image semantics."

The image parsing software works like the facial recognition system in a modern digital camera, but this system does it at a much more sophisticated level, recognizing and classifying various elements in categories such as:

face/skin, hair, cloth, sky/cloud, water surface, spindrift, mountain, road/building, rock, earth, wood/plastic metal, flower/fruit, grass, leaf, trunk/twig, background, and other.

Image parsing is similar to what a human artist does. Painter Armand Cabrera wrote about this recently in his post "Learning to See."

The authors of the computer program assigned the computer to use a hierarchy of as many as 700 different brushes for each of these forms, with various settings for opacity (depending on whether it's painting a cloud or a rock), stroke direction, dryness and wetness, and, of course color.

The strokes are applied differently depending on the forms, and they're overlapped spatially, painting from the background to the foreground so that the objects in front "occlude" or paint across the ones behind.
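The back-to-front ordering described above is the same idea as the classic "painter's algorithm." Here is a toy sketch of it, not the researchers' implementation: the `Region` class, its `depth` rank, and the use of pixel sets in place of brush strokes are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Region:
    label: str    # semantic category, e.g. "sky/cloud" or "trunk/twig"
    depth: int    # hypothetical depth rank: larger means farther from the viewer
    pixels: set   # set of (row, col) coordinates the region covers


def paint_back_to_front(regions, height, width):
    """Fill a canvas region by region, farthest first, so nearer regions
    paint over (occlude) whatever lies behind them."""
    canvas = [[None] * width for _ in range(height)]
    for region in sorted(regions, key=lambda r: r.depth, reverse=True):
        for row, col in region.pixels:
            canvas[row][col] = region.label
    return canvas


# A 2x3 scene: sky fills the frame, a tree trunk stands in front of it.
sky = Region("sky/cloud", depth=10, pixels={(r, c) for r in range(2) for c in range(3)})
trunk = Region("trunk/twig", depth=1, pixels={(0, 1), (1, 1)})
canvas = paint_back_to_front([trunk, sky], height=2, width=3)
```

The input order of the regions doesn't matter, because sorting by depth guarantees the sky goes down before the trunk that overlaps it.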

The colors in the painterly images are shifted according to a statistical observation that the typical hue and chroma distribution of colors in photos (left) differ from those of paintings (right). Paintings have less blue and green, and more yellow, red, and magenta.

What does this mean for traditional painters? Should we welcome it or be worried? If this software is hooked up to a Vangobot, anyone could buy a really nice wedding portrait painted in oil from a decent wedding photo. A portable Vangobot with this software could start winning plein air competitions, just as computers won chess matches.

Is there some skill set that is out of reach of programmed machines? As Anonymous mused in the comments of the last post, "maybe it's along the lines of caricature, and the intensification of forms and space and color and emotions and beauty and mystery?" Will computers ever achieve the higher level judgments, what Harding referred to as "selection, arrangement, sentiment, and beauty?"

I would be inclined to say yes, yes, yes, and yes. Computers will learn to caricature and they'll do a good job at it. They will learn to paint science fiction and fantasy, and to do Van Gogh or Picasso transformations. Anything that can be deconstructed can be programmed. The more these computers advance, the better we understand what we do as painters. As blog reader Todd said it so well: "Robots will only be able to represent as much of humanity as we understand about ourselves."

Despite it all, I do believe that there is something elusive, some element of real genius in great artists that will always stay beyond the reach of materialistic or deconstructive analysis. The greatness of Mozart and Rembrandt and Shakespeare can never be matched by a computer. And for more earthbound practitioners like me, I can take comfort in the faith that other humans will always enjoy works that are filtered through the human consciousness and the human hand, just as we prize home cooking, hand knitting, wooden boats, and folk music.

I believe we should congratulate Kun Zeng, Mingtian Zhao, Caiming Xiong, and Song-Chun Zhu and applaud their accomplishment. These painting systems are not faceless robots, but the creations of amazingly bright people. The one thing that is certain is that these new systems will put certain kinds of artists out of business, they will redefine what we hand-skilled artists do, and the tools will bring to the table new creative opportunities that we can't even imagine yet.

-------

Thanks, Jan Pospíšil for linking me to this paper.

Video showing Photoshop's "artistic filters"

Previously: Vangobot

Vangobot is a robotic painting machine that replicates a photo with real paint.

Vangobot can be programmed to simulate various Post-Impressionist and Pop Art styles. The output painting is created on a flatbed apparatus using actual brushes and pigment on canvas.

So far the images are limited by the initial photo and the image processing software, which has the look of an off-the-shelf Photoshop filter. In most of the examples that Vangobot has painted so far, there isn't much blending, so the result has a patchy, mechanical look.

Nevertheless, according to Wired magazine, whose cover feature explores how robots will replace many skilled jobs, Vangobot has produced some pictures that were sold through the Crate and Barrel stores, "whose customers have no clue that a machine was the creative genius behind what's hanging on their wall."

Above painting by Vangobot. Future iterations of such painting machines could enlist more advanced image manipulation such as blending, glazing, computer vision, edge detection, automated painting, and abstraction generators. In theory it could be set to the task of directly interpreting a 3D view alongside human plein air painters.

---

Previously on GJ: Computer Vision, Automated Painting, Abstraction generator, Edge detection, Random image generation

(Direct link to video) Creating the environment for the science fiction movie Prometheus involved many layers of 3D digital data, some of which came from Google Earth. Visual Effects Supervisor Richard Stammers explains how he used digital scans of a desolate location called Wadi Rum in Jordan as a starting point for constructing the alien planet. "Google Earth became an important tool for any of our previs and shoot planning," he says.

From CG Wires

-----

This Saturday, I'll be speaking at MICA in Baltimore in connection with the annual meeting of ASAI, the American Society of Architectural Illustrators. If you'd like to look into attending, here's more info.

These face-like patterns are what you get if you combine a random polygon generator with A.I. facial recognition software.

Instead of using face recognition software, it would be even more interesting to channel the multiple generation feedback loop in real time through the face-recognition areas of an individual human's brain. Depending on how you set things up, it could be a hallucinatory experience, leading either to kittens, monsters, familiar faces, or maybe Alfred E. Neuman.

Code by Phil McCarthy, Prosthetic Knowledge, via BoingBoing

Previously on GJ: Pareidolia and Apophenia, Abstraction Generator

Yesterday was the last day of the annual convention of the Association of Medical Illustrators in Toronto, Ontario, Canada. I attended the gathering as a guest lecturer and workshop presenter.

(Above: Bert Oppenheim) The AMI includes professional visualizers who create artwork that shows what is going on inside the body. The artwork must be scientifically accurate and clear in its explanatory purpose, for people's lives often depend on it.

The images appear in textbooks, magazines, courtrooms, museums, digital readers, and doctor's offices. These days, most of the work is digital, including 2D, 3D, and animation. About half of the 2000 trained practitioners are self-employed.

Members have traveled from as far away as Russia to attend this convention, but most hail from the USA and Canada. The handful of universities that offer accredited graduate programs in biomedical illustration include Georgia Health Sciences University, University of Illinois, Johns Hopkins University School of Medicine in Baltimore, Rochester Institute of Technology, the University of Texas Southwestern Medical Center in Dallas, and the University of Toronto.

The training includes rigorous work not only in traditional and digital rendering techniques, but also in dissection and an array of life science studies. It's a very interesting field for young artists to consider if they are looking for something that combines art and science.

Thanks, AMI, for inviting me and for being such great hosts and workshop attendees!

----

Association of Medical Illustrators

AMI's FAQ


So much of these movies is animated, they could qualify as animation features half of the time!

This is hollywood. Nothing is real.

If only they would spend some time creating quality STORIES.
